I tried introducing SRE to a service as an Embedded SRE

SRG (Service Reliability Group) is a group that mainly provides cross-functional support for the infrastructure of our media services, improves existing services, launches new ones, and contributes to OSS.

This article briefly explains how SRG, a horizontal organization, builds and maintains an SRE culture within a specific team. It also introduces the materials used to explain SRE to the business side and an OSS tool for fostering that culture, so we hope it will be helpful when you introduce SRE.

- Goal of this article
- ⚠ Before you implement SRE
- Find Toil etc.
- Communication
- Establishing an incident response flow
- 1. Discuss quality of service with the business side
- Materials used when explaining to the service provider
- 2. Determine the Critical User Journey (CUJ)
- 3. Determine SLI from CUJ
- What metrics do SLIs refer to?
- 4. Write your SLO queries
- 5. Target SLO/Window and Error Budget
- About the Target Window
- 6. When the error budget runs out
- 7. Instilling SRE, regular meetings, etc.
- Infuse SREs throughout the service
- Tips
- How do I alert on SLOs?
- Error Budget Burn Rate
- Conclusion

Goal of this article

This article describes how a member of SRG, a horizontal organization, joined the DotMoney service as an Embedded SRE (Site Reliability Engineer) and worked to build an SRE culture and improve service quality.

It is based on my personal approach, which may not apply to every situation. Please treat it as one option among many.

⚠ Before you implement SRE

When I joined as an Embedded SRE, rather than just talking about SRE right away, I did various things to gain the trust of the service department.

This was my first time working as an SRE, and the service side might tire out before they could reap the benefits of SRE. The aim was to mitigate that risk somewhat by first building my own credibility.

The first things I did were to reduce toil and improve communication.

Find Toil etc.

I discovered Toil while trying to understand the infrastructure configuration of the service and investigating the deployment flow.

For example:

- Completely unmaintained EC2 and IaC

- Excessive resource allocation leads to cost waste

- Long-running deployment flow

- There is no response flow when an incident occurs

etc.

Since the focus of this article is SRE, I will not go into details, but by migrating from EC2 to Fargate we addressed the unmaintained EC2 and Ansible setup, the cost wasted on excessive resource allocation, and the slow deployment flow, and we were able to show the service side clear numerical results.

Communication

At DotMoney, there is a daily server-side evening meeting and a weekly general meeting, so I attend both, actively review IaC pull requests, and (obviously) respond to incidents.

Establishing an incident response flow

One of an SRE's responsibilities for ensuring service quality is to establish an incident response flow and reduce MTTR (Mean Time To Repair).

That culture also has to be sustained.

The existing incident response flow document was outdated and was not actually being followed, so we rebuilt the flow from scratch, including the business-side process.

Specifically, we introduced on-call, established the incident response flow, and started using Datadog Incident to measure MTTR and run postmortems.
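As a rough illustration of what measuring MTTR means, here is a minimal sketch in Python. It is not how Datadog Incident computes the value; the incident timestamps and the function name `mean_time_to_repair` are made up for this example, and MTTR is simply taken as the average time from detection to resolution.

```python
from datetime import datetime, timedelta


def mean_time_to_repair(incidents):
    """Average (resolved - detected) over a list of (detected, resolved) pairs."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)


# Hypothetical incidents: (detected at, resolved at)
incidents = [
    (datetime(2024, 1, 5, 10, 0), datetime(2024, 1, 5, 10, 45)),     # 45 minutes
    (datetime(2024, 1, 12, 22, 30), datetime(2024, 1, 12, 23, 45)),  # 75 minutes
]

print(mean_time_to_repair(incidents))  # 1:00:00
```

Tracking this number over time is what makes it possible to tell whether changes to the response flow actually shorten recovery.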

1. Discuss quality of service with the business side

Maintaining service quality is impossible without cooperation from the business side.

In particular, it would be difficult to take action after the error budget has been depleted without the permission of the product manager.

Materials used when explaining to the service provider

These are the materials used to explain SRE at a general meeting that included engineers and business representatives on the service side.

I think it is difficult to get everyone to understand everything at this stage. Few people can grasp SRE from a few minutes of reading the materials.

The goal of this meeting was to convey the concept of SRE (introducing SRE makes service quality visible and provides information for business decisions).

2. Determine the Critical User Journey (CUJ)

After giving everyone a general understanding of SRE, determine the CUJ from the area with the greatest business impact.

Furthermore, instead of defining many CUJs right away, we decided on one CUJ first.

Since DotMoney is a points exchange site, the flow with the biggest business impact is:

User visits the site → Opens the product page list → Can exchange points

We defined this series of steps as our CUJ.

3. Determine SLI from CUJ

After determining the CUJ, we look for the SLI that serves as an indicator of the SLO.

This is also a difficult point.

What metrics do SLIs refer to?

DotMoney does not use Real User Monitoring (RUM), so instead of front-end metrics it uses logs from the load balancer closest to the user.

If you use front-end metrics, they are affected by each user's network environment, and it is difficult to filter out outliers. RUM also tends to be expensive whichever product you choose, so if you are introducing SRE for the first time, I think the easiest approach is to use the metrics and logs of the load balancer closest to the user.

Ideally, though, accurate front-end data is more reliable than metrics from load balancers and the like, because it also captures changes in service quality caused by the front-end implementation.

In the article "Learn how to set SLOs" published by Cindy Quach, a Google SRE, she gives an example of using LB (Istio) without measuring at the front end.

And this Google Cloud Architecture Center article goes into detail about where to measure SLI from.

That article also states that client-side measurements involve many highly variable factors and are therefore not suitable for triggering a response.

Of course, it is possible to measure on the front end (client) if you put in the effort, but since this was my first time working as an SRE, I decided to measure at the load balancer closest to the user, which was the easiest option.

4. Write your SLO queries

For every step of this CUJ (user visits the site → opens the product page list → can exchange), the SLO is that "a normal response is returned within 3 seconds."

Why 3 seconds?

We investigated response times before deciding on the SLO and found that 3 seconds looked like a value we could realistically achieve at the moment.

For another of our services, we deliberately added delays to API responses and, based on our own experience using it, chose the longest response time that did not yet compromise the user experience.
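To make the definition concrete, here is a minimal Python sketch of how such an SLI could be computed from load balancer log records. It is an illustration only, not DotMoney's actual implementation: the `Request` record, its field names, and the sample user agents are assumptions. A request counts as good when it returns a 2xx or 3xx status within 3 seconds, and requests from user agents judged suspicious are dropped before counting.

```python
from dataclasses import dataclass


@dataclass
class Request:
    status_code: int
    duration_s: float
    user_agent: str


def is_good(req, threshold_s=3.0):
    """A 'normal response within 3 seconds': 2xx/3xx status and fast enough."""
    return 200 <= req.status_code <= 399 and req.duration_s <= threshold_s


def sli(requests, suspicious_agents=frozenset()):
    """Good requests divided by valid requests; None when nothing is left to count."""
    valid = [r for r in requests if r.user_agent not in suspicious_agents]
    if not valid:
        return None
    return sum(is_good(r) for r in valid) / len(valid)


# Hypothetical log records
requests = [
    Request(200, 0.4, "Mozilla/5.0"),
    Request(302, 1.2, "Mozilla/5.0"),
    Request(200, 4.8, "Mozilla/5.0"),  # too slow
    Request(500, 0.1, "Mozilla/5.0"),  # server error
    Request(503, 0.1, "bad-bot/1.0"),  # excluded as suspicious
]

print(sli(requests, suspicious_agents={"bad-bot/1.0"}))  # 0.5
```

Excluding suspicious traffic from the denominator is what keeps attack traffic from dragging the SLI down when real users are unaffected.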

Let's take the Datadog query currently used by DotMoney when a user visits the homepage as an example.

DotMoney is frequently subjected to DoS attacks, so we filter out DoS-related requests and other suspicious requests.

Suspicious user agents change from day to day, so if the filter is left as-is, the SLO value keeps getting worse even though there is no actual impact on service quality. The filter therefore needs to be reviewed periodically.

@http.status_code:([200 TO 299] OR [300 TO 399])

5. Target SLO/Window and Error Budget

When we actually calculated the current achievement level using the query in the previous section, it came to around 99.5%.

Therefore, I set all Target SLOs to 99.5%.

The Target SLO can be flexibly lowered or raised.

It's important to set an appropriate value initially and then review it regularly.

Note that leaving your error budget unspent is not necessarily a good thing.

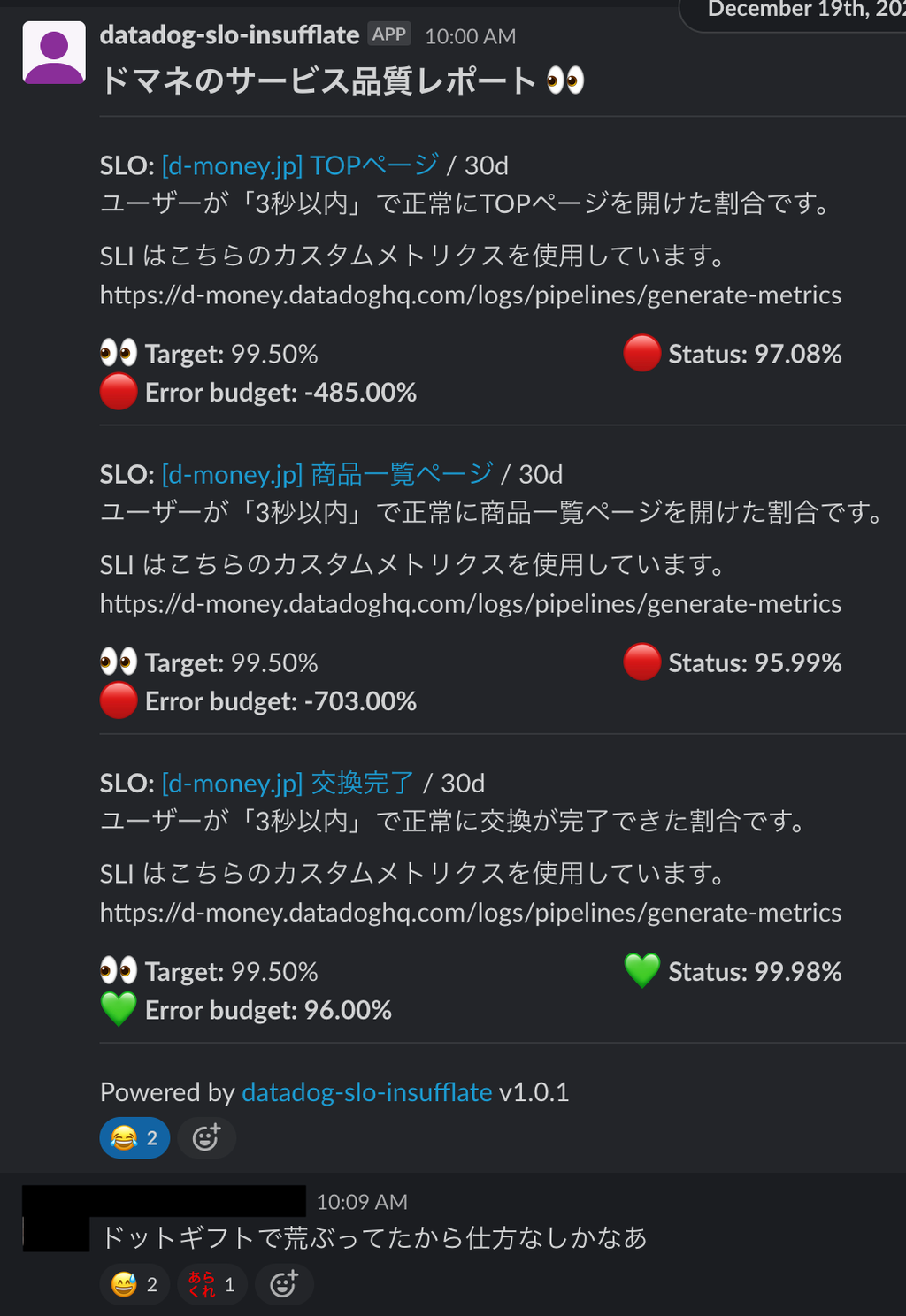

As you can see in the image above, the error budget for the "Replacement Complete" SLO monitor has not been consumed at all.

At first glance, an unconsumed error budget may look praiseworthy, but it can also raise questions such as "Is it because there are few deployments?" or "Are we avoiding technical challenges?"

The error budget is the allowance, relative to the Target SLO, that can be spent on technical challenges.

If you have a surplus error budget, try tightening your target so that the remaining budget lands at roughly 0% by the end of the target window. Alternatively, try increasing the number of deployments and taking on technical challenges.
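To put numbers on this, the following Python sketch shows the arithmetic behind an error budget. The function names and the request counts are hypothetical. With a Target SLO of 99.5% over a 30-day window, the budget is 0.5% of the window, which is about 216 minutes if expressed as time.

```python
def allowed_downtime_minutes(target_slo, window_days):
    """Error budget expressed as minutes of the target window."""
    if not 0.0 < target_slo < 1.0:
        raise ValueError("target_slo must be strictly between 0 and 1")
    return (1.0 - target_slo) * window_days * 24 * 60


def budget_remaining(target_slo, total_events, bad_events):
    """Fraction of the error budget still unspent; negative when overspent."""
    if not 0.0 < target_slo < 1.0:
        raise ValueError("target_slo must be strictly between 0 and 1")
    budget = (1.0 - target_slo) * total_events  # bad events we can afford
    return (budget - bad_events) / budget


print(f"{allowed_downtime_minutes(0.995, 30):.0f} minutes")   # 216 minutes
# Hypothetical month: 1,000,000 requests, 2,000 of them bad
print(f"{budget_remaining(0.995, 1_000_000, 2_000):.0%}")     # 60%
```

A remaining budget that stays close to 100% window after window is the surplus described above: a hint that the target could be tightened or that there is room for more deployments.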

About the Target Window

The Target Window is determined by how often regular meetings are held and by the development cycle.

DotMoney's deployment cycle is approximately once a week, but since I am the only Embedded SRE working at DotMoney, I decided that a Target Window of 30 days would be best in terms of resources.

For example, if your service is deployed every Wednesday, you can set regular SLO meetings on Thursdays with a one-week Target Window, allowing you to discuss changes to SLOs and error budgets due to feature releases.

Considering how things will behave when the error budget runs out, which I will explain in the next section, I think it would be quite difficult to set the Target Window to one week.

6. When the error budget runs out

With the consent of the business side, we decided how DotMoney would behave when the error budget is exhausted. The rule we agreed on is:

"Except for responding to production outages, fixes to restore reliability, and feature releases involving external companies, we prohibit feature releases when the error budget is exhausted."

DotMoney makes an exception because there are times when it is absolutely necessary to release something due to relationships with external companies.

We also make an exception to the feature release freeze when we can secure the resources to work on restoring reliability at the same time.

This will slow down feature releases, but it will not prevent them.

In fact, when the error budget ran out, we were close to releasing a new feature. We secured one DotMoney engineer to work on restoring reliability, and the two of us, including myself, made those fixes while the new feature was released in parallel. I think we handled the exhausted error budget quite well.
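The release rule described in this section can be written down as a small decision function. The Python sketch below is only an illustration of the policy as stated in the text; the names `Change`, `release_allowed`, and `reliability_work_staffed` are hypothetical, and nothing like this is claimed to be running at DotMoney.

```python
from enum import Enum


class Change(Enum):
    FEATURE = "feature"
    INCIDENT_RESPONSE = "incident_response"
    RELIABILITY_FIX = "reliability_fix"
    EXTERNAL_PARTNER_FEATURE = "external_partner_feature"


# Kinds of change that are never blocked by an exhausted error budget.
ALWAYS_ALLOWED = {
    Change.INCIDENT_RESPONSE,
    Change.RELIABILITY_FIX,
    Change.EXTERNAL_PARTNER_FEATURE,
}


def release_allowed(change, budget_remaining, reliability_work_staffed=False):
    """Return True if the change may ship under the agreed policy.

    budget_remaining is the unspent fraction of the error budget (<= 0 means exhausted).
    reliability_work_staffed is True when someone is assigned to restore reliability.
    """
    if budget_remaining > 0:
        return True  # budget left: no restriction
    if change in ALWAYS_ALLOWED:
        return True  # explicit exceptions in the rule
    return reliability_work_staffed  # features ship only alongside reliability work


print(release_allowed(Change.FEATURE, -0.1))                                 # False
print(release_allowed(Change.FEATURE, -0.1, reliability_work_staffed=True))  # True
```

Writing the rule this explicitly is mostly useful as a discussion aid with the business side, since every branch corresponds to one sentence of the agreement.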

7. Instilling SRE, regular meetings, etc.

SRE is a never-ending culture.

Once SRE is introduced, it is necessary to hold regular reviews and ensure that SRE is fully implemented within the service.

In these reviews we not only check the error budget but also look back at what we have done so far to judge whether the SLI is correct and whether the SLO is too lenient or too strict.

These regular meetings are not held within SRG, the group I belong to, alone. Instead, the Embedded SRE, DotMoney engineers, and people from the business side all take part.

The goal is to spread SRE by involving people on the DotMoney side.

Infuse SREs throughout the service

When I thought about how to instill SRE throughout the entire service, I realized that regular meetings alone would not be enough to reach the people in charge of CS, the front end, and the business side.

So we created a tool that posts an SLO summary once a week to a random channel that everyone on the service side joins.

The tool, datadog-slo-insufflate, is available as a container image, so it is easy to use.

The number of reactions is still small, but more people are responding than at the beginning.

It's a tool that's better to have than not to have.

On the subject of outages: at one point, the SLO values were getting worse day by day.

Then one day, a large-scale outage occurred.

This was before we introduced the error budget burn rate alert described in the Tips section below, so we did not catch it in advance. What we learned is that when SLO values keep getting worse, a major outage eventually follows.

Tips

How do I alert on SLOs?

We have not set up any alerts for the SLOs this time, and we do not plan to do so in the future.

This is because we have set an alert for the error budget burn rate, which we will explain later.

Error Budget Burn Rate

Burn rate is a term coined by Google: a unitless value that indicates how quickly your error budget is being consumed relative to the target window of your SLO. For example, with a 30-day target window, a constant burn rate of 1 means the error budget will be completely consumed in exactly 30 days. A constant burn rate of 2 depletes it in 15 days, and a burn rate of 3 in 10 days.

Datadog's documentation on burn rate is easy to understand.
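The relationship between burn rate and time can be checked with a few lines of Python. This is just the arithmetic from the definition above, with hypothetical function names: the time to exhaustion is the target window divided by the burn rate, and the share of budget consumed in a given period is the burn rate multiplied by that period's share of the window.

```python
def days_to_exhaustion(window_days, burn_rate):
    """Days until the whole error budget is gone at a constant burn rate."""
    if burn_rate <= 0:
        raise ValueError("burn_rate must be positive")
    return window_days / burn_rate


def budget_fraction_consumed(burn_rate, hours, window_days):
    """Fraction of the error budget consumed after `hours` at a constant burn rate."""
    return burn_rate * hours / (window_days * 24)


for rate in (1, 2, 3):
    print(rate, days_to_exhaustion(30, rate))  # 30.0, 15.0, 10.0 days

# A burn rate of 14.4 sustained for 1 hour uses about 2% of a 30-day budget.
print(f"{budget_fraction_consumed(14.4, 1, 30):.1%}")  # 2.0%
```

Seen this way, a high burn rate over a short period is a statement about how much of the month's budget a single hour is allowed to cost.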

This error budget burn rate allows you to alert directly on the error budget, eliminating the need to alert on SLOs.

It also kills two birds with one stone by eliminating alerts for 5xx error rates, which tend to be noise alerts.

The actual alerts set up on DotMoney look like this.

burn_rate("").over("30d").long_window("1h").short_window("5m") > 14.4

At the time of writing, short_window and long_window cannot both be set from the web UI, so I configured the alert via Terraform (API). This may have become possible in the web UI by the time this article is published.

The reason for using both windows: using only short_window increases the frequency of alerts and makes them more susceptible to noise, so adding long_window to the condition reduces noise and makes the alerts more credible.
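The idea behind combining the two windows can be sketched in Python as follows. This is a simplified model based on my understanding of multi-window burn rate alerts, not Datadog's implementation: the function names are hypothetical, the error rates are made-up inputs, and the burn rate is taken as the observed error rate divided by the error rate the SLO allows.

```python
def burn_rate(error_rate, target_slo):
    """Observed error rate relative to the error rate the SLO allows."""
    return error_rate / (1.0 - target_slo)


def should_alert(long_error_rate, short_error_rate, target_slo=0.995, threshold=14.4):
    """Alert only when both the long and the short window burn too fast."""
    return (
        burn_rate(long_error_rate, target_slo) > threshold
        and burn_rate(short_error_rate, target_slo) > threshold
    )


# Sustained problem: 10% errors over the last 1h and the last 5m.
print(should_alert(long_error_rate=0.10, short_error_rate=0.10))  # True
# Already recovering: the 1h window is still bad, the last 5m is fine.
print(should_alert(long_error_rate=0.10, short_error_rate=0.01))  # False
# Brief spike: the last 5m is bad, but the 1h window barely moved.
print(should_alert(long_error_rate=0.01, short_error_rate=0.50))  # False
```

The long window filters out brief spikes, while the short window stops the alert from firing long after the problem has already recovered.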

Conclusion

This article is an account of my experience as an SRE beginner and of how I introduced SRE to a service.

Introducing SRE is a high hurdle, but I think it's important to give it a try.

Of course, SRE is both a culture and an organization, so it's not a story that ends just because it's been introduced; the story is likely to continue.

I don't fully understand SRE myself, but I think the quickest way to gain knowledge about SRE is to introduce it without overthinking it at first and then improve it every day. (It's hard to see what the future holds and there's a lot of mundane work involved...)

Recently, I have been thinking that I would like to visualize the link between SRE and business impact.

For example, I would like to compare sales revenue when the error budget has been used up and gone negative with sales revenue when the error budget is spent properly (or has a surplus). Making that difference visible would show concretely, rather than vaguely, that SRE truly has an impact on the business.

If anyone has already done this, please let me know.

SRG is looking for people to work with us.

If you're interested, please contact us here.