Deep Dive into VPC CNI: An In-Depth Analysis of IPAMD and Security Groups For Pods

This is Kumo Ishikawa (@ishikawa_kumo) of the Service Reliability Group (SRG) in the Technology Headquarters.

SRG (Service Reliability Group) is a group that mainly provides cross-sectional support for the infrastructure of our media services: improving existing services, launching new ones, and contributing to OSS.

Error examined in this article: add cmd: failed to assign an IP address to container

Contents:

- AWS VPC Add-on

- Structure

- Life Cycle

- CNI Plugin Details

- IPAMD Plugin Details

- Big picture

- About the "failed to assign an IP address to container" error

- Security Groups For Pods

- Structure

- Setting method and ENV explanation

- Constraints

- References

- Conclusion

AWS VPC Add-on

The AWS VPC CNI plays a central role in the networking configuration of EKS, and its native integration with AWS VPC streamlines network management on EKS.

The AWS VPC CNI consists of the following components:

- CNI Plugin

Following the CNI specification, it communicates with IPAMD over gRPC to obtain an IP address and then configures the Pod network.

- IPAMD Plugin

Manages the AWS ENIs on each node and pre-assigns IP addresses so that Pods can start quickly.

Structure

The VPC CNI mainly provides the functions of Pod IP allocation and network routing.

- Pod IP allocation

IP addresses are assigned to Pods using EC2 ENIs and IPAMD. The general flow is as follows:

- Network Routing

Network routing between Pods and from Pods to the outside world is configured using multiple routing tables and iptables rules. They are used as follows:

- Pod ↔ External network: iptables

- Pod ↔ Pod: routing tables

As with regular SNAT, address translation is performed when packets pass through a virtual network interface (veth) and an EC2 ENI (e.g. eth).

Communication between Pods and from Pods to the outside world is mainly carried out via veth and eth, and various routing tables are implicitly applied along the way.
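The Pod IP allocation described above can be pictured with a small sketch. It is only an illustration in Python, not the plugin's Go code: WarmPool, assign, and release are hypothetical names, and the pool is just an in-memory list standing in for the IP addresses that IPAMD has already attached to the node's ENIs.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class WarmPool:
    """Hypothetical stand-in for the IPs that IPAMD keeps pre-assigned on a node."""
    free_ips: List[str]
    assigned: Dict[str, str] = field(default_factory=dict)  # pod name -> IP

    def assign(self, pod: str) -> str:
        # Repeated requests for the same pod return the same IP.
        if pod in self.assigned:
            return self.assigned[pod]
        if not self.free_ips:
            # Illustrative error only; the real plugin reports failures differently.
            raise RuntimeError("no free IP in the warm pool")
        ip = self.free_ips.pop(0)
        self.assigned[pod] = ip
        return ip

    def release(self, pod: str) -> None:
        # Return the pod's IP to the pool so that another pod can use it.
        ip = self.assigned.pop(pod, None)
        if ip is not None:
            self.free_ips.append(ip)


pool = WarmPool(free_ips=["10.0.1.10", "10.0.1.11"])
first_ip = pool.assign("pod-a")
second_ip = pool.assign("pod-b")
pool.release("pod-a")
```

Because the addresses are already in the pool, assigning one is a local operation and does not have to wait for an EC2 API call, which is the reason pre-assignment speeds up Pod startup.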

Life Cycle

VPC CNI consists of seven main binaries:

| Binary | Description |
|---|---|
| aws-k8s-agent | IPAMD gRPC server |
| aws-cni | CNI Plugin implementation; mainly manages ENI/IP settings and the Linux network configuration within the VPC |
| grpc-health-probe | Health check tool for IPAMD |
| cni-metrics-helper | Helper tool that collects metrics and publishes them to CloudWatch |
| aws-vpc-cni-init | Initialization component that ensures prerequisites are in place, such as system parameters, IPv6 settings, and copying each binary file |
| aws-vpc-cni | Formerly entrypoint.sh; it currently exists as a tool for launching IPAMD and managing its lifecycle |
| egress-cni | CNI Plugin implementation that mainly uses SNAT to manage specific routing rules and policies for outbound traffic |

entrypoint.sh execution order

- Copy the CNI plugin binaries to /host/opt/cni/bin

- Launch IPAMD

- A run step that is currently commented out because it is handled as an InitContainer

- Copy the CNI config file to the specified directory on the kubelet.

- Wait for IPAMD to start, checking with grpc-health-probe

CNI Plugin Details

aws-cni is implemented in plugins/routed-eni/cni.go.

File structure

Registering the CNI Plugin

Command handlers such as cmdDel are registered through PluginMainWithError.

- CNI Plugin specification: https://www.cni.dev/docs/spec/#cni-operations
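To make the registration step more concrete, here is a minimal sketch of how a CNI plugin can route an invocation to its handlers. Per the CNI specification, the runtime passes the operation in the CNI_COMMAND environment variable and the network configuration as JSON on stdin. The function names (cmd_add, cmd_del, dispatch) and the returned dictionaries are hypothetical and written in Python for brevity; they are not the aws-cni implementation.

```python
import json


def cmd_add(conf: dict) -> dict:
    # Placeholder: a real plugin would request an IP and set up the network here.
    return {"handled": "ADD", "name": conf.get("name")}


def cmd_del(conf: dict) -> dict:
    # Placeholder: a real plugin would release the IP and tear down the network here.
    return {"handled": "DEL", "name": conf.get("name")}


# Registration: map each supported operation to its handler.
HANDLERS = {"ADD": cmd_add, "DEL": cmd_del}


def dispatch(env: dict, stdin_data: str) -> dict:
    """Pick a handler from CNI_COMMAND and pass it the parsed network config."""
    command = env.get("CNI_COMMAND", "")
    handler = HANDLERS.get(command)
    if handler is None:
        raise ValueError(f"unsupported CNI_COMMAND: {command!r}")
    return handler(json.loads(stdin_data))


result = dispatch({"CNI_COMMAND": "DEL"}, '{"name": "aws-cni"}')
```

In a real plugin, env would be os.environ and stdin_data would come from sys.stdin; they are passed in explicitly here so the sketch can be exercised without a container runtime.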

Add command

- Loading the settings: if this fails, the error "add cmd: error loading config from args" is returned. The settings loaded here include POD_SECURITY_GROUP_ENFORCING_MODE.

- Reading Kubernetes arguments: cniTypes.LoadArgs

- Configuring a gRPC connection to IPAMD

- Add Network API request: AddNetwork

- Implementing network settings (see driverClient.SetupBranchENIPodNetwork and PodVlanId)

Del command

- Loading the settings

Same as the Add command

- Reading Kubernetes arguments

Same as the Add command

- Delete attempt with previous results: tryDelWithPrevResult

- Configuring a gRPC connection to IPAMD

- Network deletion API request: DelNetwork

- Cleaning up network resources

This releases the Pod IP and related resources. If SGP is used, the Branch ENI is also deleted.

IPAMD Plugin Details

IPAMD is implemented under pkg/ipamd/*.

File structure

IPAMD processing overview

- The initialization process includes:

  - Checking the connection with the k8s API Server

  - Initializing the k8s API client

  - Initializing the Recorder that emits k8s Events

  - Initializing the IPAMD client

- Starting NodeIPPoolManager; logs are written to ipamd.log

- Starting the Prometheus Metrics API Server in a separate goroutine, serving /metrics on port 61678

- Starting the introspection API Server in a separate goroutine. It is also started unless disabled by an environment variable. The introspection API is used to monitor and diagnose the operating status of IPAMD, and currently provides four main functions:

  - Get ENI information from the IPAMD context

  - Get a specific ENI setting name from the node information

  - Get debug information for network settings

  - Get debug information for IPAMD preferences

- Starting the gRPC Server

Big picture

About the "failed to assign an IP address to container" error

The CNI Plugin returns this error when its AddNetwork request to IPAMD does not succeed.

AddNetwork processing overview

- Processing when PodENI is enabled (using SGP): the vpc.amazonaws.com/pod-eni annotation of the Pod is checked. For a Pod that is subject to SGP, VPC CNI takes one of the following two paths:

  - If the IP does not exist in the annotation, get the IP from the EC2 ENI Datastore

  - If an IP exists in the annotation, add that IP to the existing VPC IP Pool.

- Respond to the CNI Plugin with the assigned IP
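The branching in this overview can be sketched roughly as follows. This is a simplified Python illustration, not IPAMD's Go code: choose_pod_ip is a hypothetical function, the annotation payload is assumed to be a JSON list of objects with a "privateIp" field, and the sketch simply returns the annotated IP instead of modeling the IP pool bookkeeping. The error text is reused from this article only to show where a failure would surface.

```python
import json

POD_ENI_ANNOTATION = "vpc.amazonaws.com/pod-eni"


def choose_pod_ip(annotations: dict, datastore_ips: list) -> str:
    """Return the annotated Branch ENI IP if present, else take one from the datastore."""
    raw = annotations.get(POD_ENI_ANNOTATION)
    if raw:
        # Assumed payload shape: [{"privateIp": "..."}]; the real schema may differ.
        entries = json.loads(raw)
        if entries and entries[0].get("privateIp"):
            return entries[0]["privateIp"]
    if not datastore_ips:
        # No annotated IP and nothing left in the datastore: the request fails.
        raise RuntimeError("failed to assign an IP address to container")
    return datastore_ips.pop(0)


sgp_pod = {POD_ENI_ANNOTATION: '[{"privateIp": "10.0.2.5"}]'}
sgp_ip = choose_pod_ip(sgp_pod, ["10.0.1.10"])

node_ips = ["10.0.1.10"]
regular_ip = choose_pod_ip({}, node_ips)
```

A malformed annotation would make json.loads raise here, which corresponds to the annotation parsing failure case discussed in this section.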

If an error occurs at any point in the above process, Pod startup is delayed and the error may appear repeatedly.

The WARN-level logs that accompany this error fall into the following cases:

| Explanation | Relevance to SGP activation | Likely cause |
|---|---|---|
| The version information held by the CNI side and the IPAMD side does not match. | None | DaemonSet update timing issue |
| The Pod with the Pod name received by IPAMD does not exist, or annotation information could not be acquired. | Possible | Bugs in the Annotation and Resource Profile management system |
| No Trunk ENI is attached to the node, or LinkIndex information cannot be obtained. | None | Some kind of bug |
| Annotation information parsing failure | Possible | Parser and usage bugs |

More than 50 similar issues have been reported from 2020 to 2022. The main cause is believed to be a bug in the AWS VPC CNI itself.

There are also many other causes, such as:

- Using an EC2 instance type that does not support SGP (e.g., using a t series instance)

- The number of Pods that a Trunk ENI can serve (the Branch ENI count) has been exceeded.

Security Groups For Pods

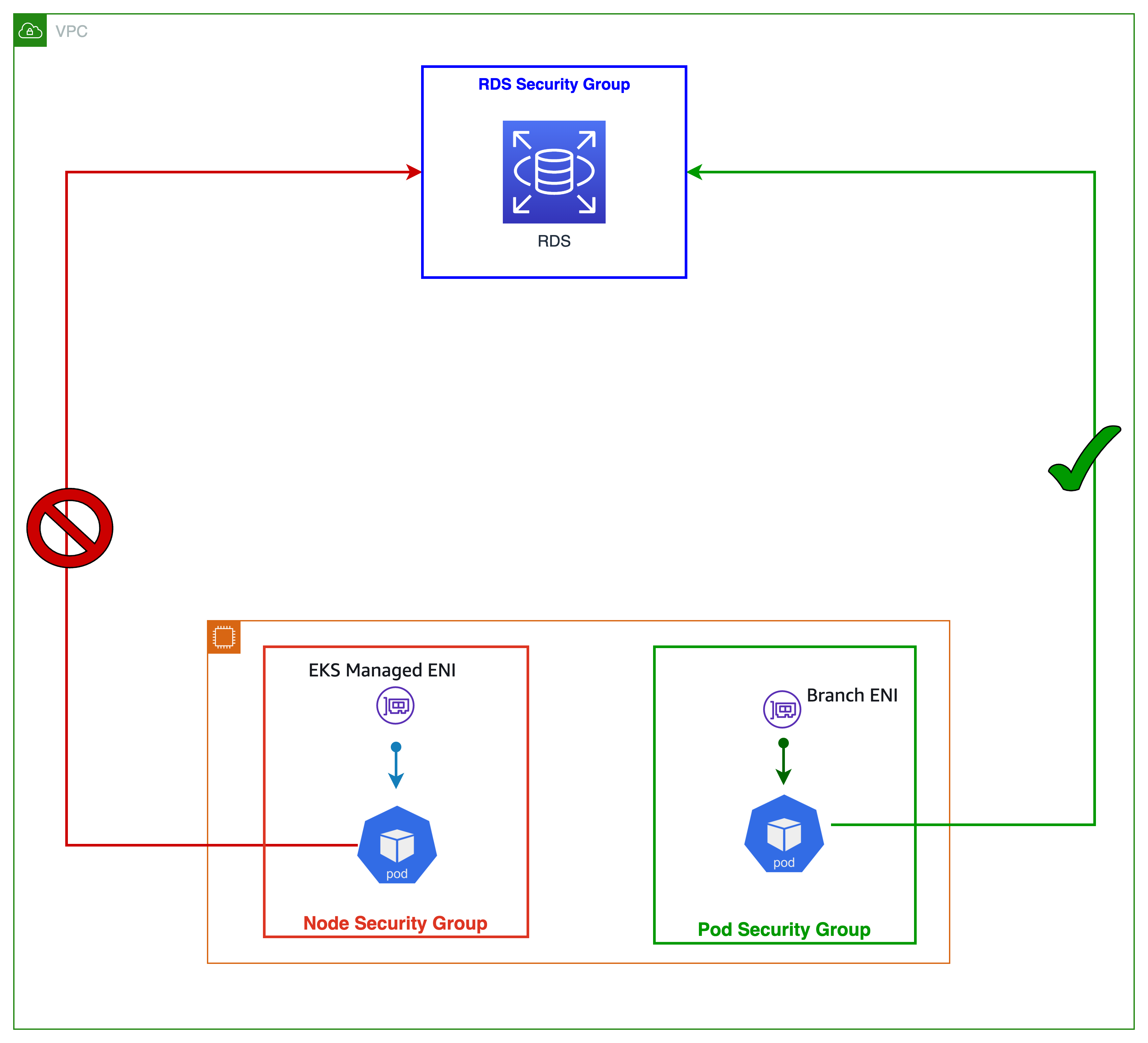

This feature applies security groups at the pod level instead of the node level, which allows you to strengthen communication security between EKS pods and other services in your VPC.

A common use case is controlling communication between EKS Pods and RDS/ElastiCache.
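Which Pods receive which security groups is declared with a SecurityGroupPolicy resource, which this article mentions again under the constraints. As a rough sketch, the manifest can be built as a plain Python dict and printed as JSON for kubectl to apply. The helper name security_group_policy, the policy name, the label, and the group ID sg-EXAMPLE are placeholders for illustration.

```python
import json


def security_group_policy(name, namespace, match_labels, group_ids):
    """Build a SecurityGroupPolicy manifest as a plain dict."""
    return {
        "apiVersion": "vpcresources.k8s.aws/v1beta1",
        "kind": "SecurityGroupPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods carrying these labels get a Branch ENI with the listed SGs.
            "podSelector": {"matchLabels": dict(match_labels)},
            "securityGroups": {"groupIds": list(group_ids)},
        },
    }


policy = security_group_policy(
    name="db-client",             # placeholder policy name
    namespace="default",
    match_labels={"role": "db-client"},
    group_ids=["sg-EXAMPLE"],     # placeholder, replace with real SG IDs
)
print(json.dumps(policy, indent=2))
```

For the RDS/ElastiCache use case, the listed security group would be the one that the database's own security group allows as an inbound source.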

Structure

Setting method and ENV explanation

For VPC CNI v1.14 and later, enabling SGP requires setting the following environment variables on the target container of the aws-node DaemonSet:

ENABLE_POD_ENI=true

POD_SECURITY_GROUP_ENFORCING_MODE=standard

For versions before 1.10, when using Liveness/Readiness Probes, you also need to set the following for the initContainers:

DISABLE_TCP_EARLY_DEMUX=true
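As a quick sanity check of the settings above, the following sketch validates a container's environment, given as a dict, against the two variables this article lists as required. check_sgp_env is a hypothetical helper, and treating an unset enforcing mode as a problem follows this article's steps rather than any documented default of the plugin.

```python
def check_sgp_env(env: dict) -> list:
    """Return a list of problems found in the aws-node container's environment."""
    problems = []
    if env.get("ENABLE_POD_ENI") != "true":
        problems.append("ENABLE_POD_ENI must be set to true")
    mode = env.get("POD_SECURITY_GROUP_ENFORCING_MODE")
    if mode not in ("strict", "standard"):
        problems.append("POD_SECURITY_GROUP_ENFORCING_MODE must be strict or standard")
    return problems


good_env = {
    "ENABLE_POD_ENI": "true",
    "POD_SECURITY_GROUP_ENFORCING_MODE": "standard",
}
good_result = check_sgp_env(good_env)
empty_result = check_sgp_env({})
```

The env dict could be filled from the container spec of the DaemonSet, for example from the JSON output of kubectl.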

About POD_SECURITY_GROUP_ENFORCING_MODE

- Strict mode

  - All inbound/outbound traffic of a Pod with an SG is controlled solely by the SG of the Branch ENI, and all traffic between Pods goes through the VPC.

- Standard mode

  - (Within the VPC) All communication is subject to the SGs of both the Primary ENI and the Branch ENI.

  - All inbound/outbound traffic of Pods with an SG is controlled only by the SG of the Branch ENI. However, inbound/outbound traffic from the kubelet is controlled by the Node SG, and the rules of the Branch ENI SG do not apply to it. When using SGP, you must therefore configure both the Node SG and the Branch ENI SG for the Pod.

  - Outbound traffic to destinations outside the VPC (external VPN, Direct Connect, or an external VPC) is handled according to the value of AWS_VPC_K8S_CNI_EXTERNALSNAT.

About WARM_* and IP_COOLDOWN_PERIOD

None of the WARM targets affects the scale of Branch ENI Pods, so set WARM_{ENI/IP/PREFIX}_TARGET based on the number of non-Branch-ENI Pods. If the cluster mostly runs Pods with a security group, consider setting WARM_IP_TARGET to a very low value instead of the default WARM_ENI_TARGET or WARM_PREFIX_TARGET to reduce wasted IPs/ENIs.
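The sizing advice above can be illustrated with a little arithmetic. This is a simplified sketch that assumes WARM_IP_TARGET means "keep this many IPs free" and ignores other settings and ENI or prefix granularity; non_branch_pod_count and ips_to_allocate are hypothetical helpers, and the Pod list is made up.

```python
def non_branch_pod_count(pods: list) -> int:
    """Count only Pods that draw their IP from the warm pool (no Branch ENI)."""
    return sum(1 for pod in pods if not pod["uses_branch_eni"])


def ips_to_allocate(warm_ip_target: int, total_ips: int, assigned_ips: int) -> int:
    """How many more IPs are needed to keep warm_ip_target IPs free."""
    free = total_ips - assigned_ips
    return max(0, warm_ip_target - free)


pods = [
    {"name": "web", "uses_branch_eni": False},
    {"name": "db-client", "uses_branch_eni": True},   # SGP Pod, not counted
    {"name": "worker", "uses_branch_eni": False},
]
in_use = non_branch_pod_count(pods)
shortfall = ips_to_allocate(warm_ip_target=2, total_ips=3, assigned_ips=in_use)
```

With two regular Pods using two of the three attached IPs, one IP is free, so one more is needed to reach a target of 2. The SGP Pod does not enter the calculation at all, which is why a low WARM_IP_TARGET is enough on nodes that mostly run SGP Pods.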

Constraints

- As mentioned above, when creating a SecurityGroupPolicy, the SecurityGroup set for the Pod must include both the Branch ENI SG and the Node SG.

- Since trunk ENIs are included in the number of ENIs that a node can have, if the number of ENIs on a node reaches the number of ENIs supported by the instance type in use, trunk ENIs will not be created, and pods with SG applied on the node will not be created.

- The number of Branch ENIs (i.e., the number of Pods) that can be created with a Trunk ENI is limited. This is not listed in the documentation, so please refer to the source code and calculate the actual number from the map defined there. The calculation method is as follows.

IsTrunkingCompatible: true

- Since restarting a Pod after applying SGP takes longer than usual, please use the most appropriate Deployment Update Strategy.
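The Branch ENI limit mentioned in the constraints above can be checked with a lookup like the one below. This is a sketch under stated assumptions: the instance type names and numbers are made-up placeholders rather than real limits, branch_interfaces is an assumed field name, and only IsTrunkingCompatible appears in this article. Real values must be taken from the source code map.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceLimits:
    is_trunking_compatible: bool  # mirrors IsTrunkingCompatible in the source map
    branch_interfaces: int        # assumed field: Branch ENIs per Trunk ENI


# Placeholder entries for made-up instance types, NOT real limits.
LIMITS = {
    "example.small": InstanceLimits(is_trunking_compatible=False, branch_interfaces=0),
    "example.large": InstanceLimits(is_trunking_compatible=True, branch_interfaces=9),
}


def max_sgp_pods(instance_type: str) -> int:
    """Upper bound on SGP Pods per node; 0 if the type cannot host a Trunk ENI."""
    limits = LIMITS.get(instance_type)
    if limits is None or not limits.is_trunking_compatible:
        return 0
    return limits.branch_interfaces


def can_schedule(instance_type: str, running_sgp_pods: int) -> bool:
    """True if one more SGP Pod still fits on a node of this type."""
    return running_sgp_pods < max_sgp_pods(instance_type)


small_limit = max_sgp_pods("example.small")
large_limit = max_sgp_pods("example.large")
```

A check like this, run against the real map, makes it easier to tell whether a stuck Pod is hitting the Branch ENI limit or an unsupported instance type.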

References

Conclusion

VPC CNI has finally become usable as a Network Policy Engine. It has continued to evolve since 2019, and has recently become more user-friendly. We look forward to the future of VPC CNI.

In the official SGP tutorial, the red-framed boxes labeled Important contain important information and constraints that are worth reviewing.

Understanding the source code related to IPAMD and SGP has greatly improved the accuracy of my troubleshooting. I would like to continue using this learning method in the future.

SRG is looking for people to work with us.

If you're interested, please contact us here.

SRG runs a podcast where we chat about the latest hot topics in IT technology and books. We hope you'll listen to it while you work.