Once you try OpenCode, you won't want to go back

SRG (Service Reliability Group) mainly provides cross-sectional support for the infrastructure of our media services: improving existing services, launching new ones, and contributing to OSS.

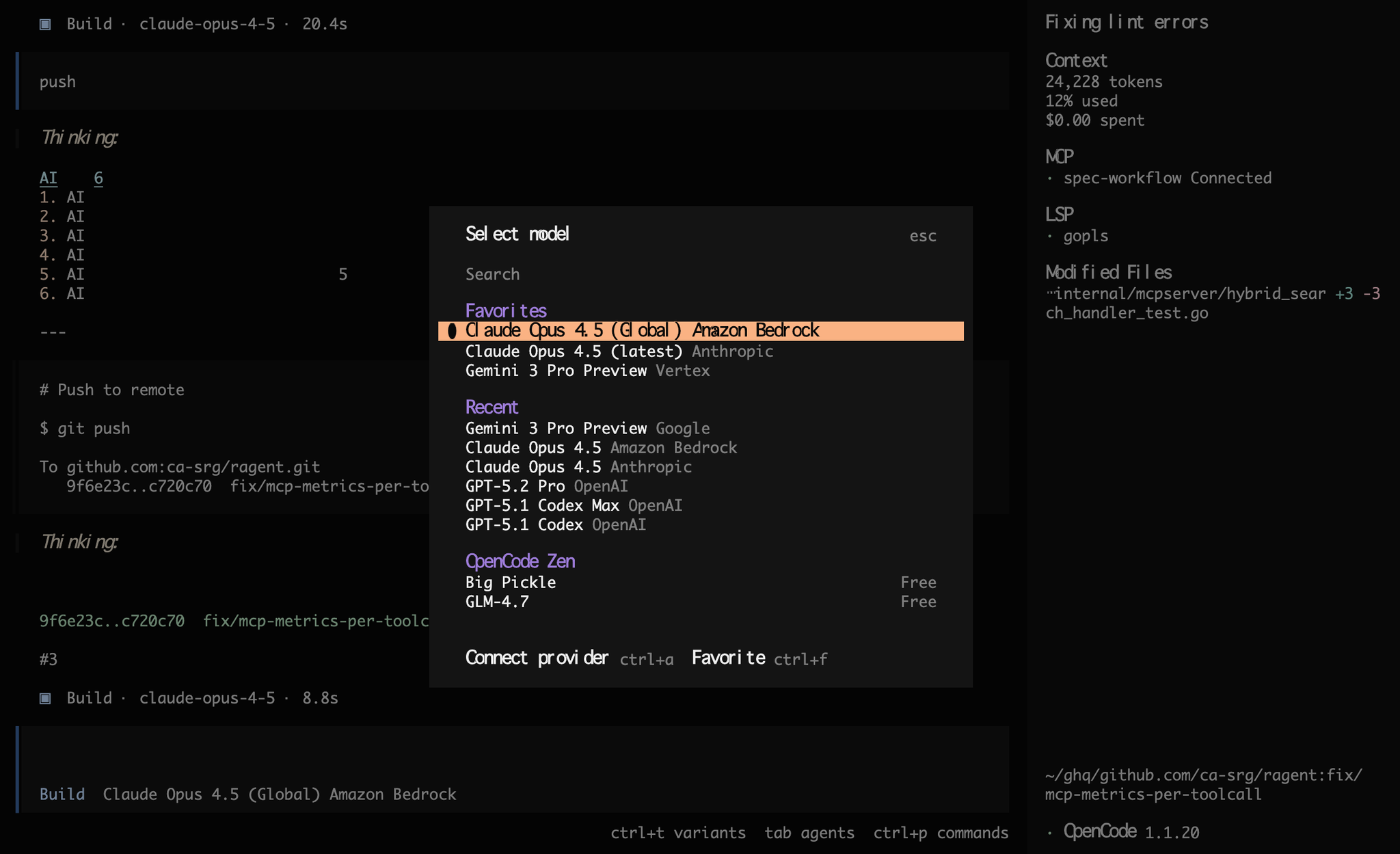

This article examines OpenCode, a TypeScript-based AI coding tool, and explains how it helps developers stay productive without interruptions: it works flexibly across models and addresses issues such as response speed and rate limits.

AI-powered coding assistance tools have been evolving rapidly in recent years.

Among them, "OpenCode" is quietly generating enthusiasm among engineers.

OpenCode is more than just a CLI tool; it offers multiple interfaces, including a Desktop App and a Web UI (opencode web).

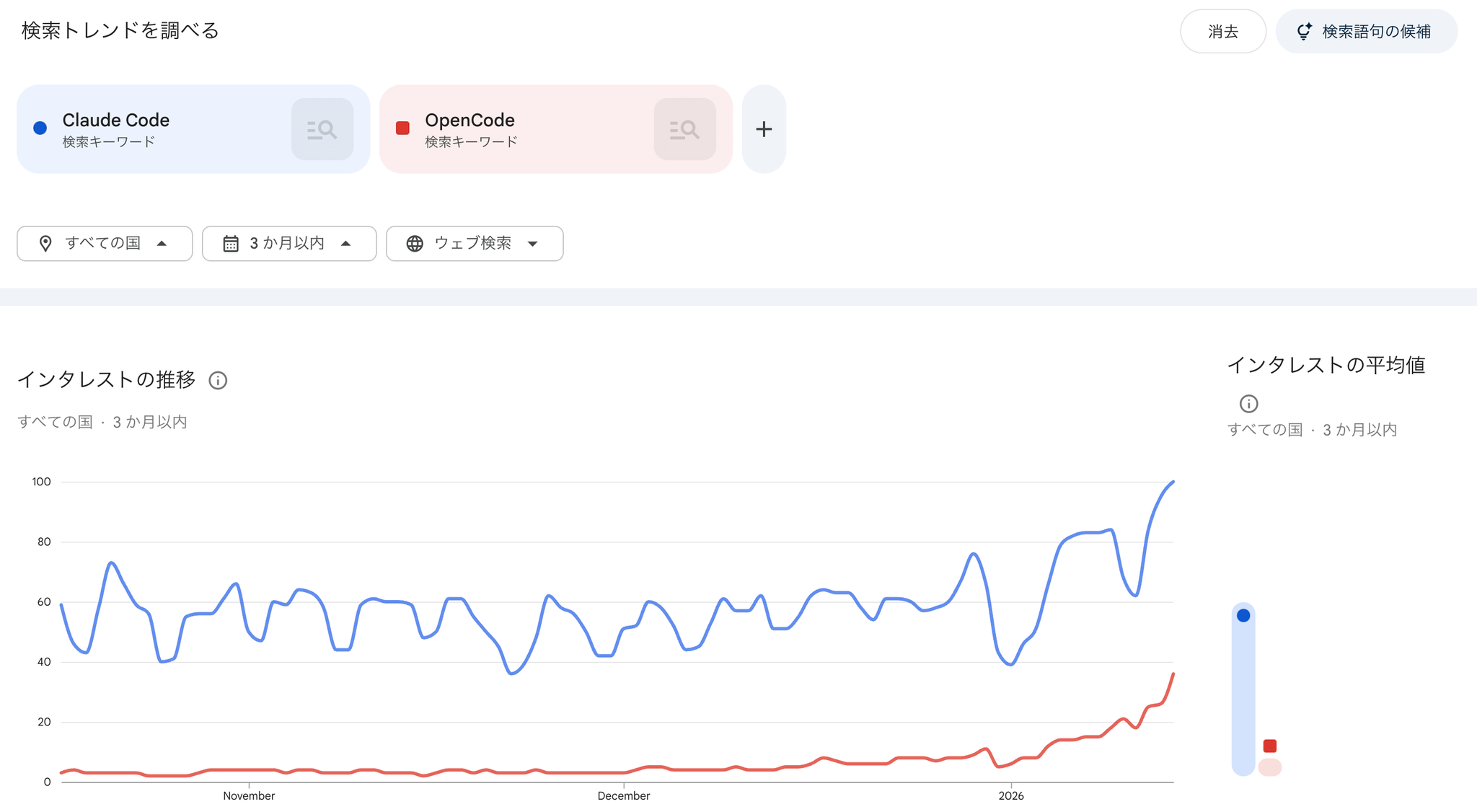

OpenCode is growing fast

According to Google Trends, searches for it have been increasing rapidly since the start of 2026.

The repository also has nearly 70k stars, so it's safe to say the tool is gaining momentum right now.

After using it every day for about three months, I'll dig into why I feel that "once you start using it, you can't go back."

OpenCode supported providers

- OpenCode Zen (a pay-as-you-go service provided by OpenCode)

- OpenCode Black (a subscription service provided by OpenCode)

- 302.AI

- Amazon Bedrock

- Antigravity (Google)

  - There are some points to note, which will be explained later.

- Anthropic

  - There are some points to note, which will be explained later.

- Azure OpenAI

- Azure Cognitive Services

- Baseten

- Cerebras

- Cloudflare AI Gateway

- Cortecs

- DeepSeek

- Deep Infra

- Fireworks AI

- GitLab Duo

- GitHub Copilot

- Google Vertex AI

- Groq

- Hugging Face

- Helicone

- llama.cpp

- IO.NET

- LM Studio

- Moonshot AI

- MiniMax

- Nebius Token Factory

- Ollama

- Ollama Cloud

- OpenAI

- OpenRouter

- SAP AI Core

- OVHcloud AI Endpoints

- Scaleway

- Together AI

- Venice AI

- Vercel AI Gateway

- xAI

- Z.AI

- ZenMux

- Custom provider

So many providers are supported that it's hard to find one that isn't.

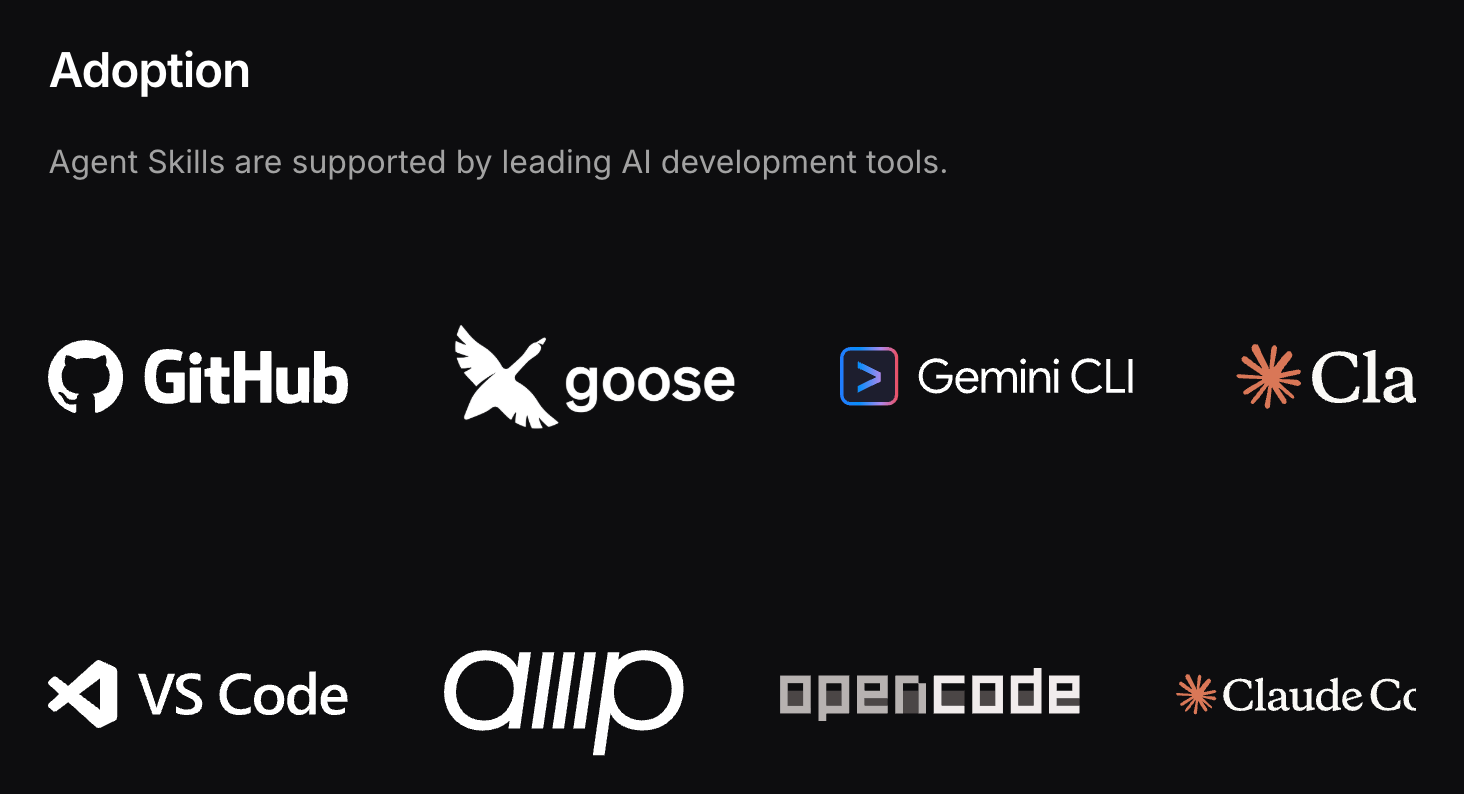

OpenCode is also involved in the standardization of Agent Skills

OpenCode's name appears alongside GitHub, Gemini, and Claude.

OpenCode is becoming increasingly popular overseas.

Incredible rendering speed and responsiveness

The first thing that strikes you when you start using OpenCode is how fast it is.

Among the many AI agent tools available, OpenCode's rendering speed and response time stand out.

For example, even with the same Anthropic model, Opus 4.5, as the backend, OpenCode feels noticeably faster than the official Claude Code.

This is due to efficient internal processing.

For instance, in `packages/console/app/src/routes/zen/util/provider/anthropic.ts`, OpenCode adds cache control when transforming requests to Anthropic.
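To make the idea of "adding cache control" concrete, here is a minimal sketch. It is not OpenCode's actual code: the types and the `withSystemPromptCaching` function are hypothetical, and the model name is a placeholder. The only part taken from Anthropic's API is the `cache_control: { type: "ephemeral" }` marker on a content block, which asks the API to cache the prompt prefix up to that block so that later turns can reuse it.

```typescript
// Hypothetical types for illustration; not OpenCode's actual code.
type CacheControl = { type: "ephemeral" };

interface TextBlock {
  type: "text";
  text: string;
  cache_control?: CacheControl;
}

interface AnthropicRequest {
  model: string;
  max_tokens: number;
  system: TextBlock[];
  messages: { role: "user" | "assistant"; content: TextBlock[] }[];
}

// Mark the last system block as a cache breakpoint so the long,
// unchanging prefix (instructions, rules) can be reused across turns.
// Returns a new request; the input is not mutated.
function withSystemPromptCaching(req: AnthropicRequest): AnthropicRequest {
  if (req.system.length === 0) return req;
  const lastIndex = req.system.length - 1;
  const system = req.system.map((block, i) =>
    i === lastIndex
      ? { ...block, cache_control: { type: "ephemeral" as const } }
      : block,
  );
  return { ...req, system };
}

const request: AnthropicRequest = {
  model: "example-model", // placeholder, not a real model ID
  max_tokens: 1024,
  system: [
    { type: "text", text: "You are a coding assistant." },
    { type: "text", text: "Project rules and tool descriptions go here." },
  ],
  messages: [
    { role: "user", content: [{ type: "text", text: "Rename this function." }] },
  ],
};

const cached = withSystemPromptCaching(request);
console.log(JSON.stringify(cached.system, null, 2));
```

Because an agent resends the same system prompt on every turn, caching that prefix is one plausible reason for the snappier feel, although the article does not measure how much it contributes.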

The TUI (Terminal User Interface) is also light and smooth, and the whole flow from code generation to suggested modifications runs without friction.

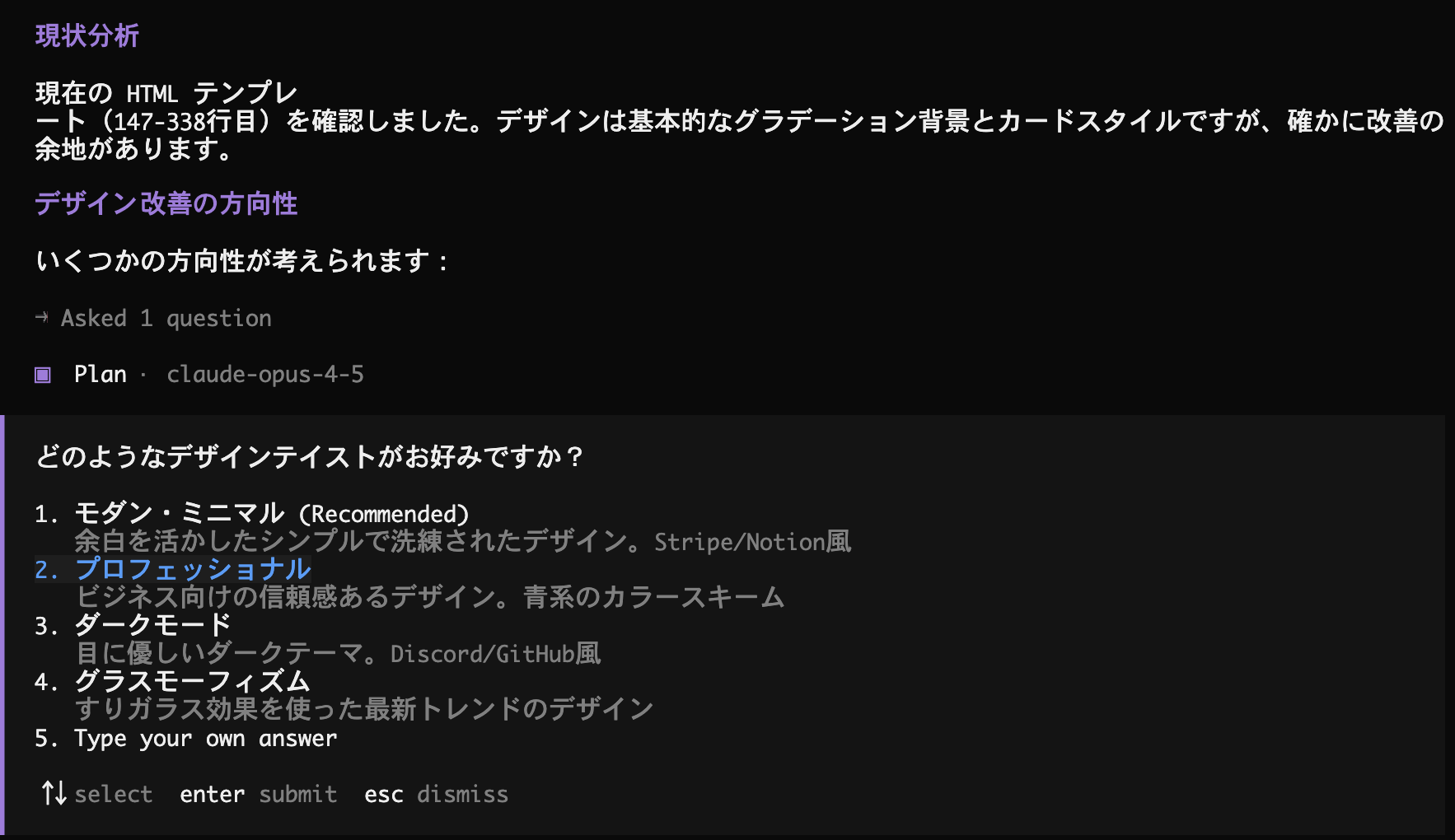

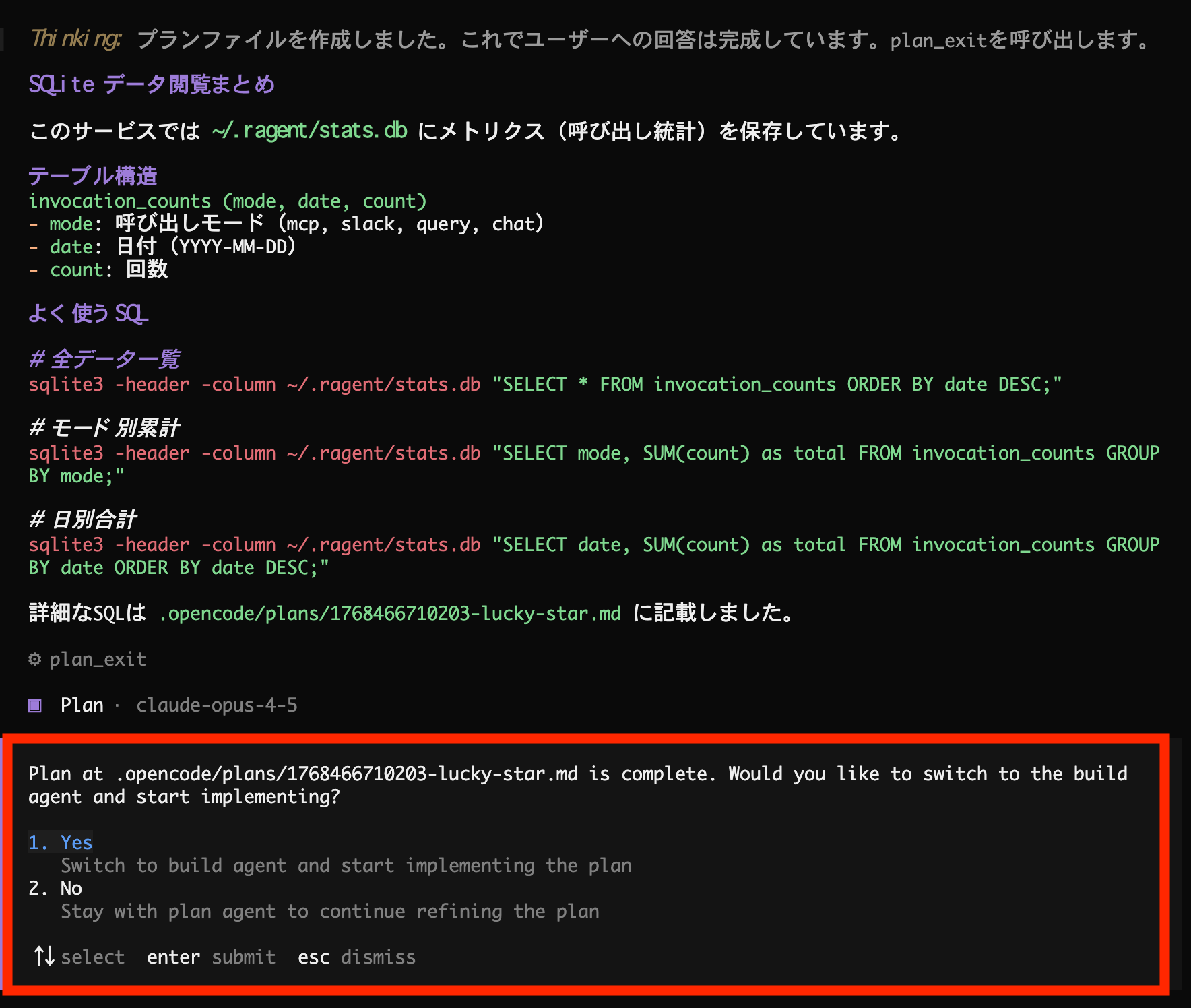

Plan Mode

It also has a "Plan Mode," which matters in the development flow. By switching modes with the Tab key, you can first consult with the AI to firm up your implementation plan before writing any code.

With the January 15th update, the plan created during planning is now written to a file. Once you review its contents and find no problems, OpenCode automatically switches from Plan mode to Build mode and implements it.

Being able to move through "thinking" and "implementation" at a stress-free speed dramatically improves the development experience.

Automatic model switching function depending on the task

There are two types of OpenCode tasks:

- General (for implementation tasks)

- Explorer (for light tasks such as exploring files)

One of the nice features of OpenCode is the ability to automatically use lightweight models depending on the task.

For example (see `getSmallModel`), if you use OpenCode with a github-copilot subscription, "gpt-5-mini" is preferred for Explorer tasks.

I think this is a very good feature in terms of both rate limits and speed.

The model selected here can also be defined by the user.
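The selection logic described above can be sketched roughly as follows. This is an illustration, not OpenCode's `getSmallModel` implementation: `pickModel`, `ModelConfig`, the `smallModel` field, and the defaults table are hypothetical names, and the main model ID in the usage lines is a placeholder. The only pairing taken from the article is github-copilot with "gpt-5-mini".

```typescript
// Hypothetical sketch of per-task model selection; names are illustrative.
type TaskKind = "general" | "explorer";

interface ModelConfig {
  model: string; // main model in "provider/model" form
  smallModel?: string; // optional user-defined override for light tasks
}

// Assumed per-provider defaults. Only the github-copilot entry comes
// from the article; a real table would cover more providers.
const SMALL_MODEL_DEFAULTS: Record<string, string> = {
  "github-copilot": "gpt-5-mini",
};

function pickModel(task: TaskKind, config: ModelConfig): string {
  // Implementation work always uses the main model.
  if (task === "general") return config.model;
  // A user-defined small model takes priority over the defaults.
  if (config.smallModel) return config.smallModel;
  const [provider = ""] = config.model.split("/");
  const small = SMALL_MODEL_DEFAULTS[provider];
  // Fall back to the main model when no lightweight default is known.
  return small ? `${provider}/${small}` : config.model;
}

const copilot: ModelConfig = { model: "github-copilot/example-large" };
console.log(pickModel("general", copilot));
console.log(pickModel("explorer", copilot));
```

Checking the user override before the provider defaults mirrors the article's point that the selected model can be defined by the user, while everyone else still gets a cheaper, faster model for exploration without configuring anything.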

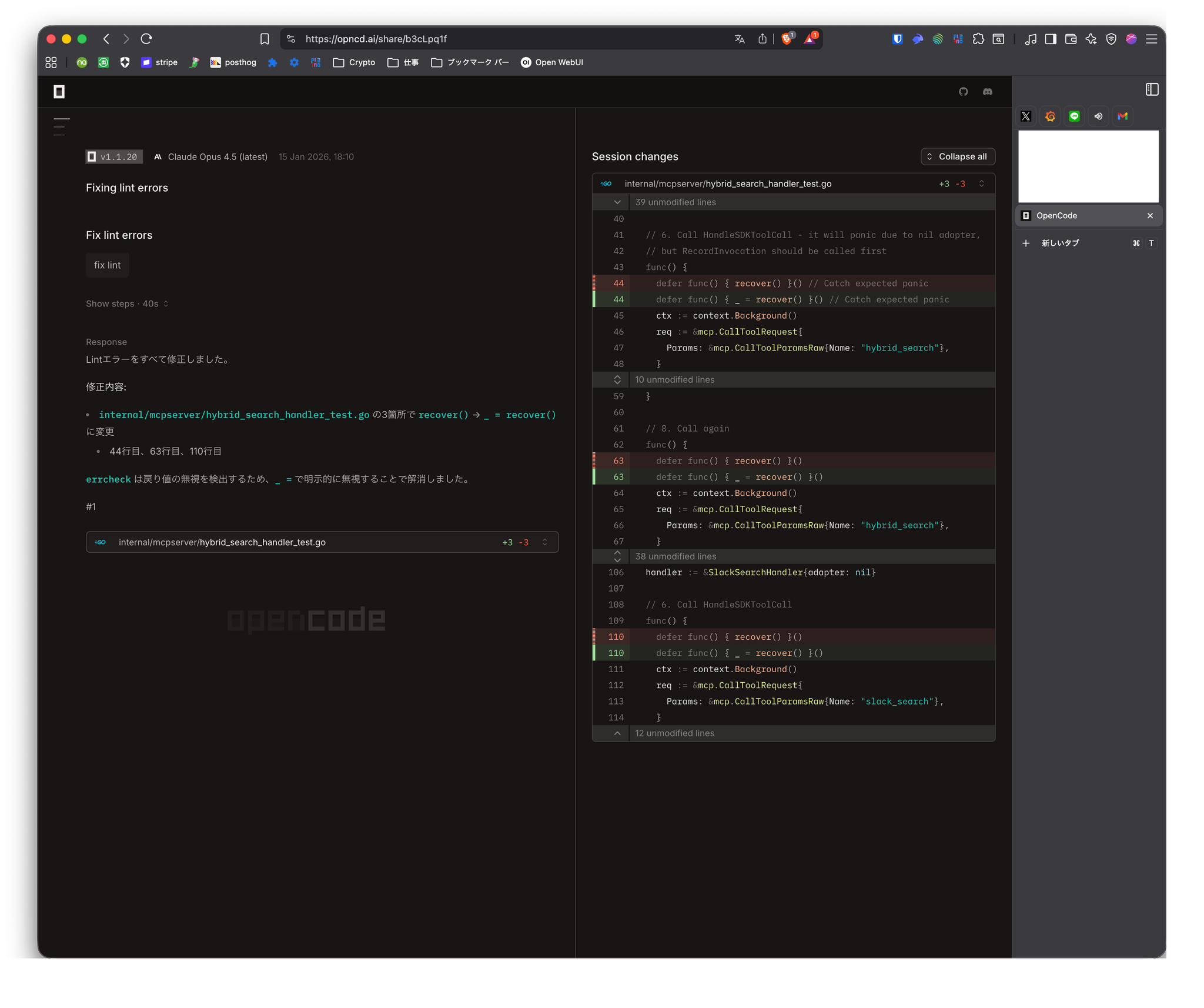

Session sharing feature

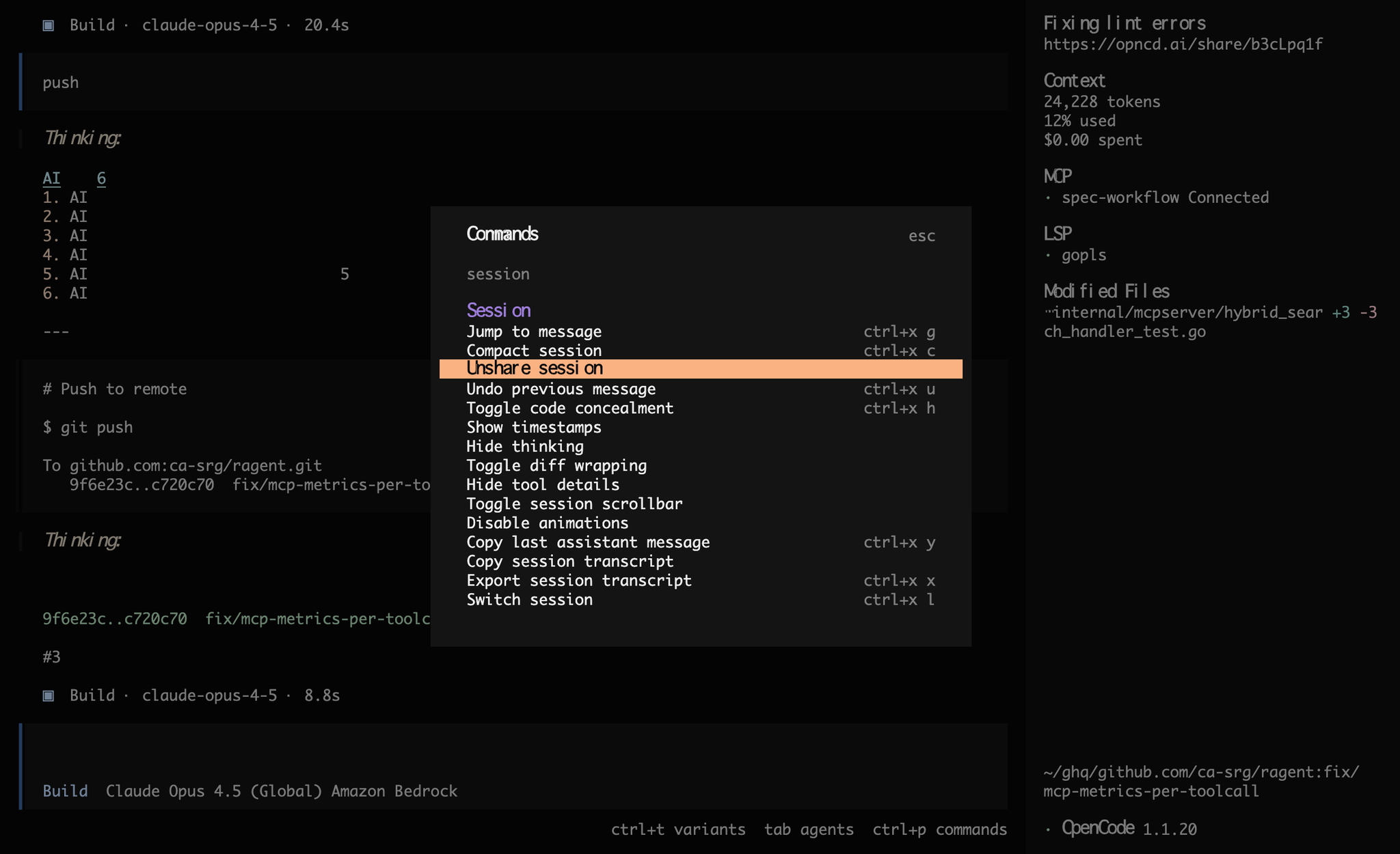

You can open the command list with ctrl+p and share the current session. This may make it easier for developers to discuss work with each other and to debug Agent Skills, memory, and MCP.

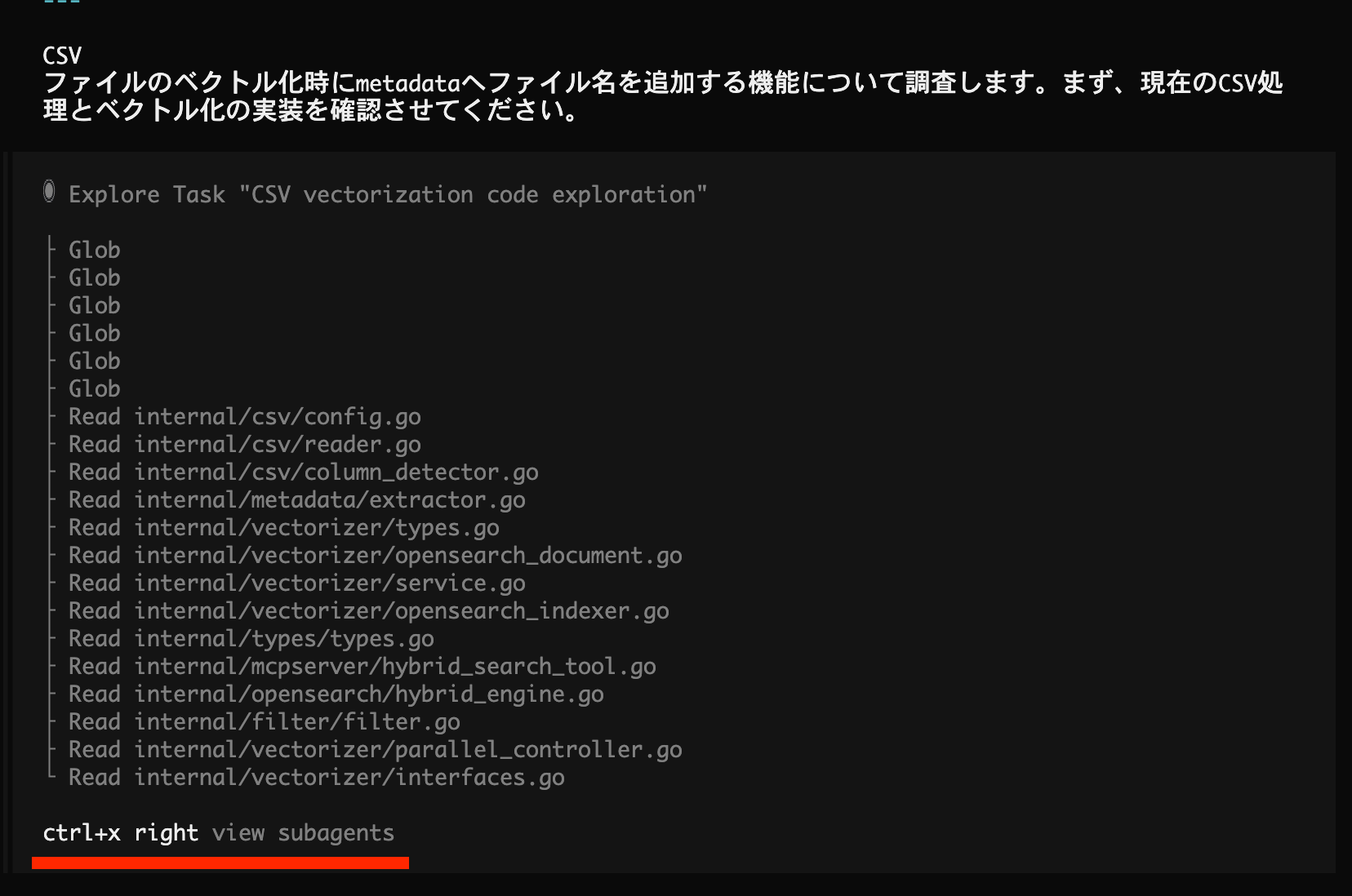

Automatically used subagents

Claude Code now has this as well, but OpenCode automatically uses subagents for tasks such as file searches, so it explores codebases faster.

Because the appropriate subagent is chosen automatically, you don't need to spell it out in memory or elsewhere.

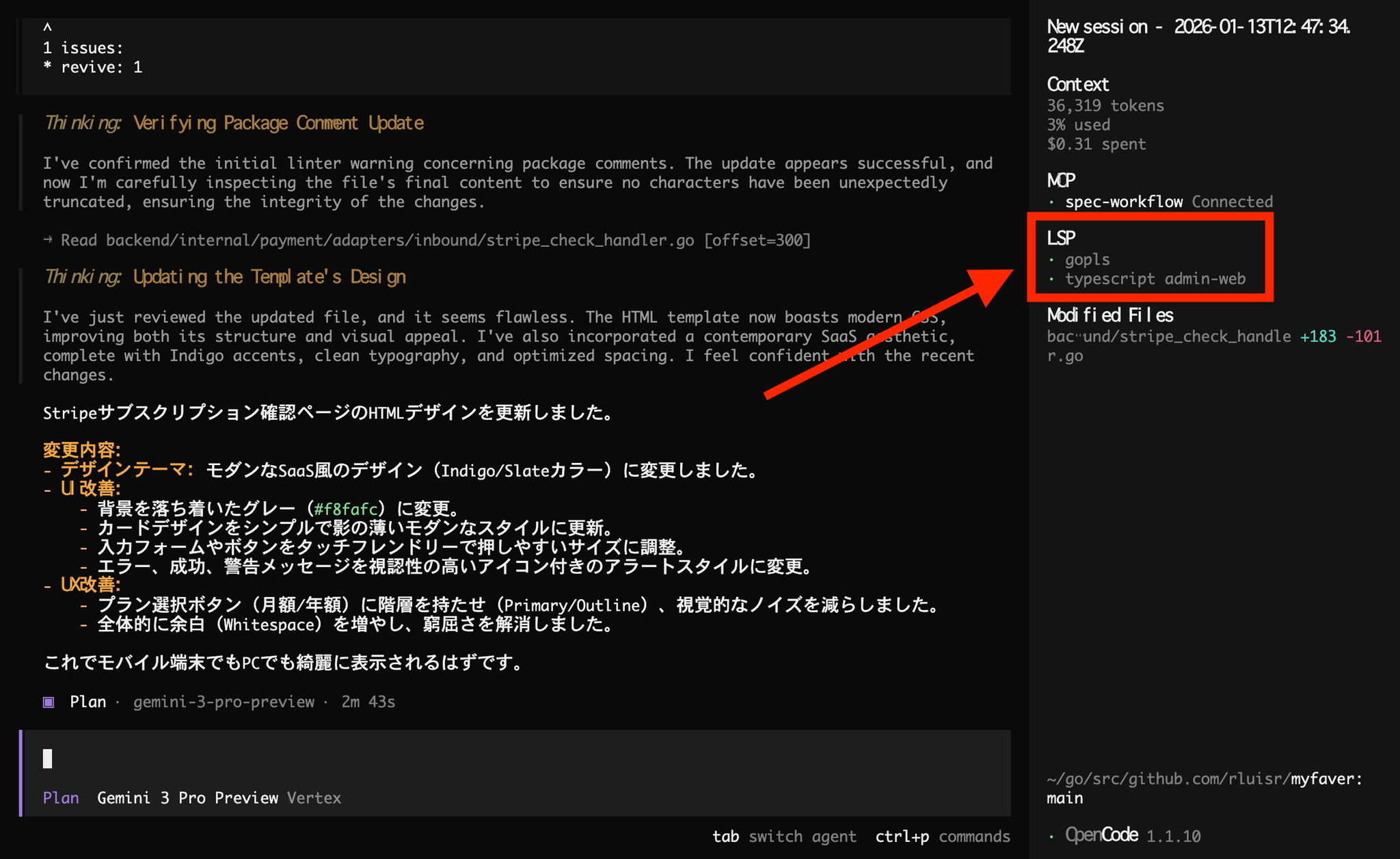

Native LSP support

Unlike Claude Code and others, OpenCode automatically detects and uses LSP servers that are installed locally.

Notably, even in a monorepo, a separate LSP server is used for each language.
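As a rough illustration of what "an LSP for each language" means in a monorepo, the sketch below groups files by the language server that would handle them. The extension table and the `groupByServer` function are assumptions made for this example; the article does not say which servers OpenCode launches or how it detects them.

```typescript
// Illustrative mapping from file extension to a language server.
// This table is an assumption, not OpenCode's actual configuration.
const SERVER_BY_EXTENSION: Record<string, string> = {
  ".ts": "typescript-language-server",
  ".tsx": "typescript-language-server",
  ".go": "gopls",
  ".rs": "rust-analyzer",
  ".py": "pyright",
};

// Return the extension including the dot, or "" when there is none.
function extensionOf(path: string): string {
  const name = path.slice(path.lastIndexOf("/") + 1);
  const dot = name.lastIndexOf(".");
  return dot > 0 ? name.slice(dot) : "";
}

// Group files by server so that each language in the monorepo is
// handled by its own server; files without a known server are skipped.
function groupByServer(paths: string[]): Map<string, string[]> {
  const groups = new Map<string, string[]>();
  for (const path of paths) {
    const server = SERVER_BY_EXTENSION[extensionOf(path)];
    if (!server) continue;
    const files = groups.get(server) ?? [];
    files.push(path);
    groups.set(server, files);
  }
  return groups;
}

const monorepoFiles = [
  "apps/web/src/index.ts",
  "apps/web/src/App.tsx",
  "services/api/main.go",
  "README.md",
];
for (const [server, files] of groupByServer(monorepoFiles)) {
  console.log(`${server}: ${files.join(", ")}`);
}
```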

Model switching and assets without stopping development

When you are immersed in development, one of the things you want to avoid most is being interrupted by rate limits (usage restrictions).

We've all had the experience of seeing the error message "429 Too Many Requests" and having our thoughts forced to stop.

OpenCode has a very elegant solution to this problem.

`/models`

Even if you hit the rate limit of your main model while working, you can switch to a different model or provider on the spot with this command and keep working with your context intact.

This makes it easy to keep working in OpenCode while leaving your Agent Skills and Rules as they are.
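Why switching models does not lose context can be shown with a small sketch. It assumes, for illustration only, that the conversation history lives in the session rather than in the model; `Session`, `RateLimitError`, the `Send` type, and the model IDs are all hypothetical, and the fake `send` function stands in for a real provider call.

```typescript
// Hypothetical sketch: history belongs to the session, not to the model.
interface Message {
  role: "user" | "assistant";
  content: string;
}

class RateLimitError extends Error {
  readonly status = 429;
}

// Stand-in for a provider call; a real client would make an HTTP request.
type Send = (model: string, history: readonly Message[]) => string;

class Session {
  readonly history: Message[] = [];
  model: string;
  private readonly send: Send;

  constructor(model: string, send: Send) {
    this.model = model;
    this.send = send;
  }

  // In this sketch, the equivalent of choosing another entry in `/models`:
  // only the model changes, the history is left untouched.
  switchModel(next: string): void {
    this.model = next;
  }

  ask(prompt: string): string {
    this.history.push({ role: "user", content: prompt });
    return this.complete();
  }

  // Resend the existing history, for example after switching models.
  retry(): string {
    return this.complete();
  }

  private complete(): string {
    const reply = this.send(this.model, this.history);
    this.history.push({ role: "assistant", content: reply });
    return reply;
  }
}

// Fake provider: the main model is rate limited, the backup is not.
const fakeSend: Send = (model, history) => {
  if (model === "provider-a/main") {
    throw new RateLimitError("429 Too Many Requests");
  }
  return `${model} answered with ${history.length} message(s) of context`;
};

const session = new Session("provider-a/main", fakeSend);
try {
  session.ask("Refactor the parser module.");
} catch (err) {
  if (!(err instanceof RateLimitError)) throw err;
  session.switchModel("provider-b/backup");
  console.log(session.retry());
}
```

In OpenCode the switch is something you do yourself with `/models`; the `catch` branch here only plays the role of the user making that choice.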

OpenCode lets you use your own API keys for OpenAI and Anthropic, and it also offers two managed options: Zen and Black.

Zen provides access to a list of models that the OpenCode team has tested and curated, which removes the need for individual contracts and gives you a stable development environment.

Black, on the other hand, is positioned as a subscription for more advanced needs, letting you focus purely on coding without worrying about API management and limits.

I've heard recently that Codex is good for code reviews, but with OpenCode you don't have to go back and forth between CLIs; you can just switch using `/models`.

OpenCode and Third-Party Subscriptions

In short:

- Using a Claude subscription with OpenCode violates Anthropic's Terms of Service

  - Use Claude via the API, GitHub Copilot, Bedrock, or Vertex instead

- OpenAI subscription → OK

- GitHub Copilot subscription → OK

- Antigravity subscription → Unknown

Information on this topic changes frequently; what follows is current as of January 16, 2026.

Anthropic

Using a Claude Code subscription from third-party tools such as OpenCode is prohibited.

OpenAI Subscription

Unlike Anthropic, OpenAI officially permits using its subscription with OpenCode.

GitHub Copilot subscription

This is also officially permitted.

Antigravity Subscription

I don't expect any problems when using the Gemini API or Vertex, but it's unclear whether the Antigravity subscription may be used this way.

Conclusion

OpenCode is more than just "another AI CLI tool."

Its speed, its flexible ecosystem spanning CLI, Desktop, and Web, and its model management features that keep developers in the flow are so comfortable that once you experience them, you won't want to go back to other tools.

It is being actively developed as open source software, and given how polished it already is, it has the potential to match or even surpass paid commercial tools.

For engineers looking to maximize their productivity in their daily coding work, OpenCode is a tool worth trying out right now.
