Automated recovery workflows that leverage alert descriptions as dynamic runbooks

SRG (Service Reliability Group) is a team that mainly provides cross-functional support for the infrastructure of our media services: improving existing services, launching new ones, and contributing to OSS.

This is the 17th article in the CyberAgent Group SRE Advent Calendar 2025.

This article examines a mechanism for using AI to interpret Grafana alert descriptions and automatically generate recovery commands, introducing a method for achieving flexible failure response while reducing the effort required for script maintenance.

The host names and alerts mentioned in this article come from my personal environment and are unrelated to the company I belong to.

This time, by combining Grafana's alerting functionality, the workflow automation tool n8n, and AI, we verified a mechanism that interprets the description included in an alert and automatically generates and executes recovery commands.

Contents

- Background and Objectives
- Architecture and Mechanisms
- Processing flow
- Implementation and Verification
- 1. Register n8n Webhook as an alert destination for Grafana
- 2. Add a routing policy for alerts
- 3. Configuring Grafana alerts
- 2. Building an n8n workflow
- Webhook contents
- Checking the operation of the AI Agent Node
- Execute command
- 3. Operation verification
- Considerations and future challenges
- Summary

Background and Objectives

Typically, automated failure recovery (auto-remediation) is implemented by tying a specific script or Ansible Playbook to each alert.

However, this approach requires developing and maintaining another script every time a new alert type is added.

So this time, we tried treating the Description set on a Grafana alert as the Runbook itself.

We will verify whether a flexible automated recovery flow can be achieved by using AI to interpret recovery procedures written in natural language and generate and execute appropriate Linux commands on the fly.

Grafana alerts can also carry a runbook URL, which n8n could fetch, but this time we use the Description.

Architecture and Mechanisms

The verification environment is configured as follows:

- Grafana: Monitoring and alerting. The recovery procedure is written in natural language in the Description field.

- n8n: Receives alerts via webhook and controls the workflow.

- AI (LLM): Using AI tools on n8n (such as the LangChain node), the Linux command to be executed is generated from the contents of the Description.

- Target Server: The environment in which the generated commands will be executed.

Processing flow

- Grafana detects anomalies and issues an alert.

- The alert notification (webhook) is sent to n8n. Its payload contains the Description.

- n8n receives the webhook and extracts the Description text.

- The AI agent on n8n reads the Description and generates the "Linux command to perform this procedure."

- n8n executes the generated command on the target server (locally or via SSH).

- The execution result is checked, and the recovery is considered complete.
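The extraction step in this flow can be sketched in Python. This is only an illustration under assumptions: the sample payload is made up, the function name `extract_alert_fields` is hypothetical, and the field layout follows the `alerts[0].annotations.description` path used later in the n8n expression (n8n additionally wraps the request under `body`, which is omitted here).

```python
import json


def extract_alert_fields(payload: dict) -> dict:
    """Pull the runbook text and the target host out of a webhook body."""
    alerts = payload.get("alerts") or []
    if not alerts:
        raise ValueError("webhook payload contains no alerts")
    first = alerts[0]  # this sketch only looks at the first alert
    return {
        "status": first.get("status", ""),
        "description": first.get("annotations", {}).get("description", ""),
        "instance": first.get("labels", {}).get("instance", ""),
    }


# Sample body shaped like an alert webhook; every value here is invented.
sample = json.loads("""
{
  "alerts": [
    {
      "status": "firing",
      "labels": {"alertname": "Nginx Down", "instance": "web01.example", "n8n": "true"},
      "annotations": {"description": "<recovery steps in natural language>"}
    }
  ]
}
""")

fields = extract_alert_fields(sample)
print(fields["instance"], "->", fields["description"])
```

In the actual workflow this extraction is done by n8n expressions rather than by custom code; the sketch only makes the data path explicit.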

Implementation and Verification

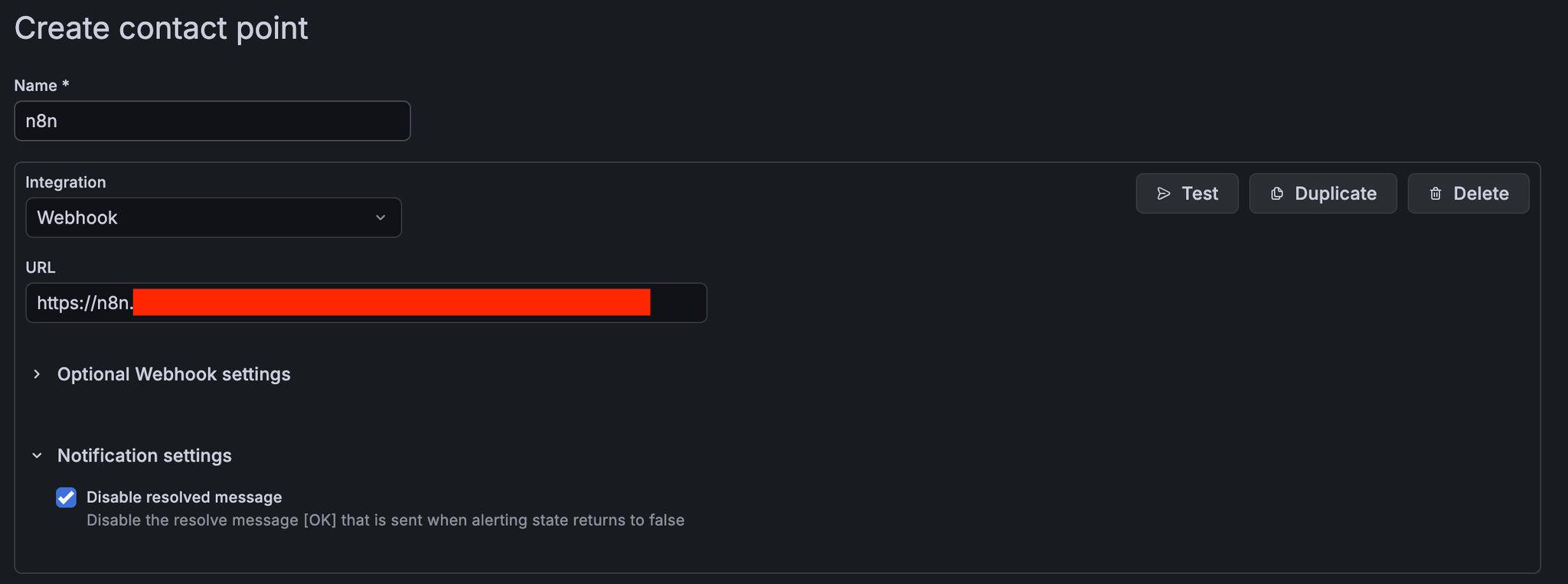

1. Register n8n Webhook as an alert destination for Grafana

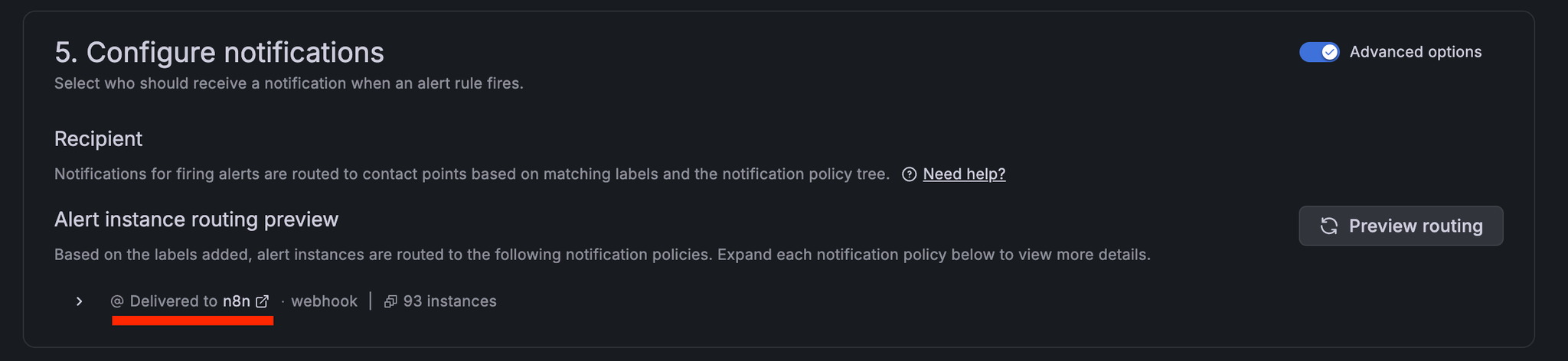

2. Add a routing policy for alerts

The policy routes alerts that carry the following label to the n8n webhook destination:

n8n = true

3. Configuring Grafana alerts

First, create an alert rule in Grafana.

The key point here is to write specific recovery steps in the Summary or Description; they serve as the instructions for the AI.

For example, for a web server process monitoring alert, you would write it as follows:

- Alert Name: Nginx Down

- Description:

In this way, you can write down in English (or Japanese) what to check and how to deal with it.

This is what makes it a dynamic runbook.

Also, add a label that matches the routing policy mentioned earlier. Preview routing in the rule editor can be used to confirm the match.

2. Building an n8n workflow

On the n8n side, we created a workflow with the following node configuration:

- Webhook Node: Receives a POST request from Grafana.

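- AI Agent Node: Reads the Description from the webhook payload with the expression {{ $json.body.alerts[0].annotations.description }} and generates the command.

- Full prompt: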

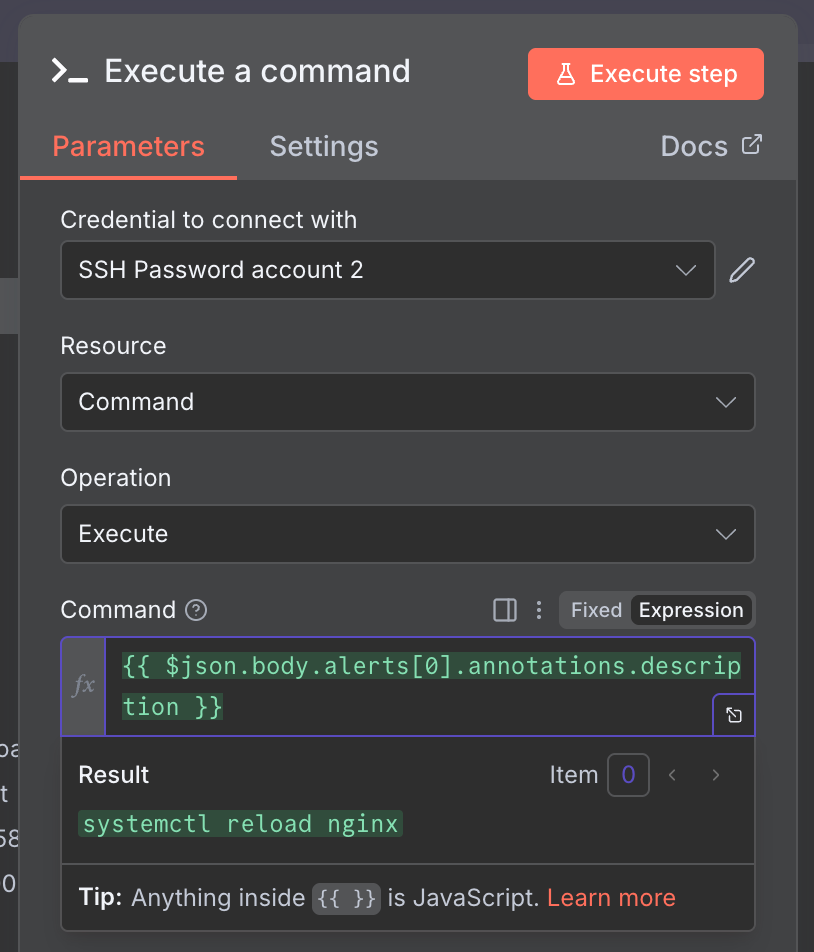

- Execute Command Node: Takes the commands output by the AI and actually executes them on the server (sometimes using an SSH node).
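What the AI Agent Node does between the webhook and the command execution can be approximated as follows. This is a rough sketch, not the prompt used in this verification: the template wording and the helper names `build_prompt` and `clean_command` are assumptions made for illustration. The second helper reflects a practical concern, namely that a model may wrap its reply in Markdown fences or add extra lines, so the reply is reduced to exactly one command line before it is passed on.

```python
FENCE = "`" * 3  # Markdown code-fence marker, built indirectly to keep this block readable

# Hypothetical prompt template; the article's actual prompt is not reproduced here.
PROMPT_TEMPLATE = (
    "You are an operations assistant. Read the recovery procedure below and "
    "reply with exactly one Linux command that performs it, with no explanation.\n\n"
    "Procedure:\n{description}"
)


def build_prompt(description: str) -> str:
    """Embed the alert Description into the prompt sent to the model."""
    return PROMPT_TEMPLATE.format(description=description.strip())


def clean_command(llm_output: str) -> str:
    """Reduce a model reply to a single command line, or fail loudly."""
    lines = [ln.strip() for ln in llm_output.strip().splitlines()]
    # Drop empty lines and fence lines (with or without a language tag).
    lines = [ln for ln in lines if ln and not ln.startswith(FENCE)]
    if len(lines) != 1:
        raise ValueError(f"expected exactly one command line, got {len(lines)}")
    return lines[0].strip("`")  # also tolerate inline backticks


reply = FENCE + "bash\nsystemctl restart nginx\n" + FENCE
print(clean_command(reply))
```

Rejecting multi-line replies instead of picking the first line is a deliberate choice in this sketch: an ambiguous reply is safer to drop than to execute.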

Webhook contents

Checking the operation of the AI Agent Node

The left pane is the input, the middle is the AI Agent Node settings screen, and the right is the AI Agent Node output.

Output: systemctl restart nginx

Prompt contents after variable expansion

Execute command

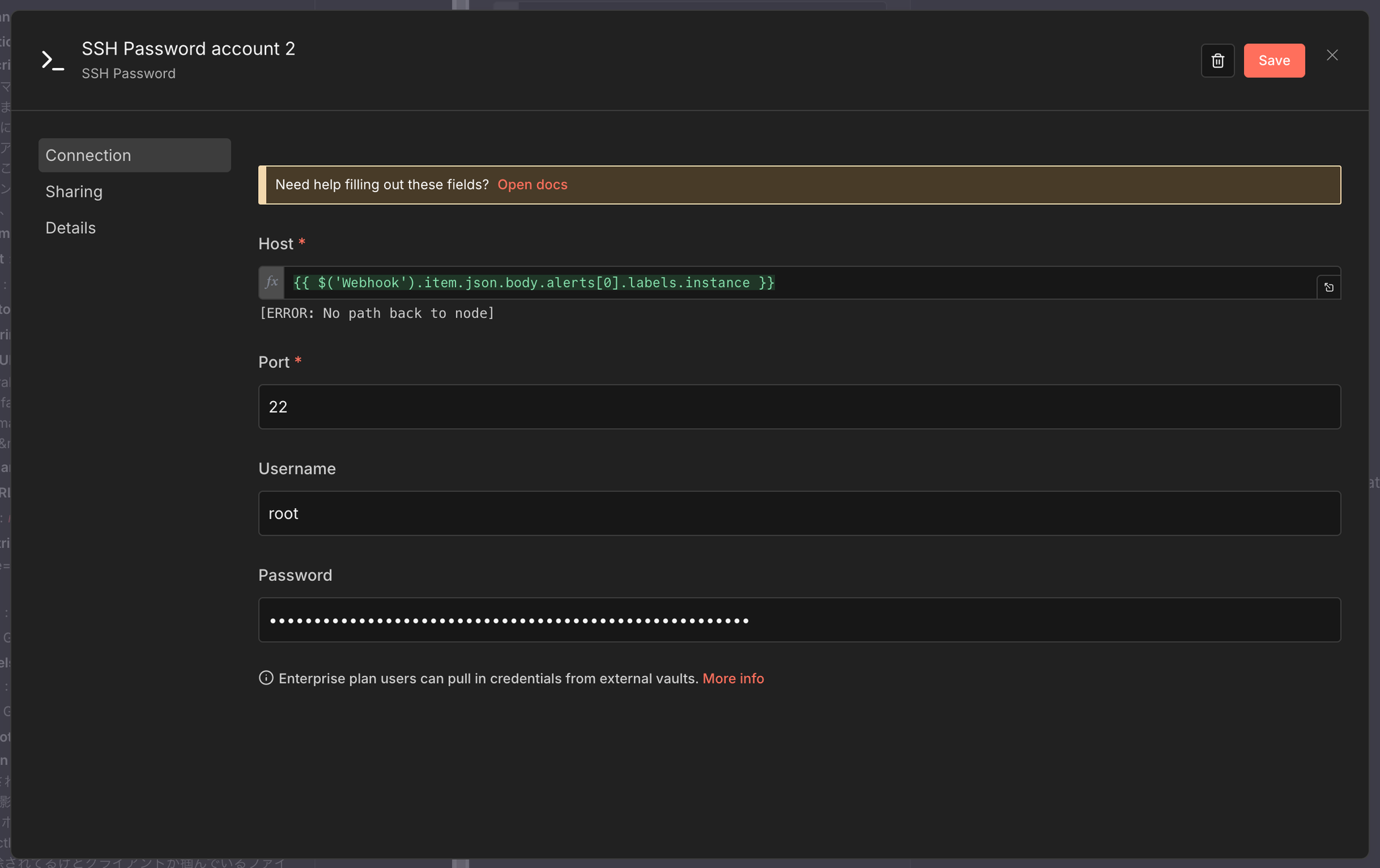

The SSH destination can also be set dynamically.

Here, it is taken from the instance label received by the webhook.

All that remains is to reference the command output by the AI Agent Node through a variable.
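Outside n8n, the same idea (host taken from the instance label, command taken from the AI output) might look like the sketch below. It assumes the OpenSSH `ssh` client is available and that key-based login already works; the user name `ops` and both function names are hypothetical.

```python
import re
import subprocess


def build_ssh_argv(instance: str, command: str, user: str = "ops") -> list:
    """Build the ssh argument list from the alert's instance label."""
    host = instance.split(":", 1)[0]  # instance labels are often "host:port"
    # Accept only plain host names or addresses, so a label cannot inject ssh options.
    if not re.fullmatch(r"[A-Za-z0-9._-]+", host) or host.startswith("-"):
        raise ValueError(f"refusing suspicious host: {host!r}")
    # BatchMode=yes makes ssh fail instead of prompting for a password.
    return ["ssh", "-o", "BatchMode=yes", f"{user}@{host}", command]


def run_remote(instance: str, command: str, timeout: int = 30):
    """Run the command on the target host and capture its output."""
    argv = build_ssh_argv(instance, command)
    return subprocess.run(argv, capture_output=True, text=True, timeout=timeout)


argv = build_ssh_argv("web01.example:9100", "systemctl restart nginx")
print(argv)
```

Calling `run_remote(...)` and checking `returncode` corresponds to the "check the execution results" step of the flow. Note that ssh hands the command string to the remote shell, which is one reason the command should be validated before it reaches this point.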

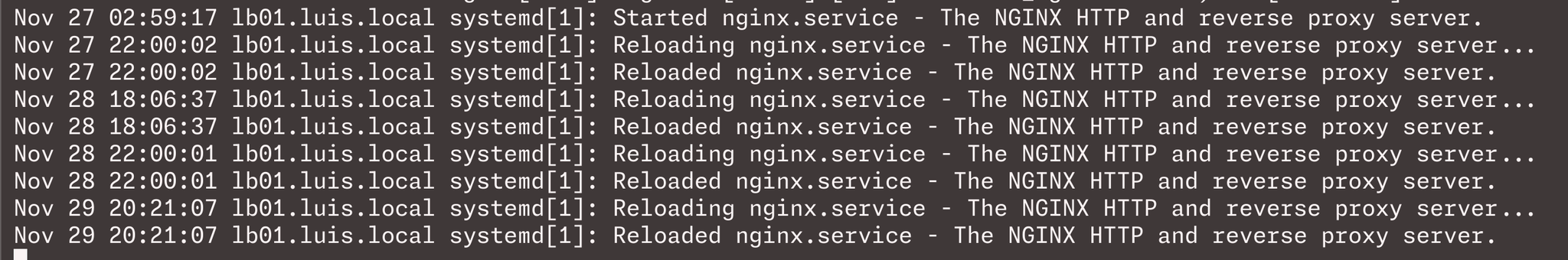

3. Operation verification

I then had Grafana actually fire an alert.

- Alert issuance: Grafana detects the downtime and sends a webhook to n8n.

- AI analysis: The AI on n8n reads the Description and generates commands.

- Automatic recovery: n8n executes the generated command.

I then confirmed that Nginx had been restarted.

As a result of the verification, we confirmed that recovery operations can be controlled simply by rewriting the Description in Grafana, without having to prepare fixed scripts in advance.
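When iterating on the workflow, it can be convenient to replay an alert without waiting for Grafana to fire. The following sketch builds such a request with the standard library only. The URL, the path `grafana-alert`, and the payload values are assumptions for illustration, and the actual send is left commented out so that nothing is triggered by accident.

```python
import json
import urllib.request

# Hypothetical n8n test webhook URL; replace it with the one shown on the Webhook node.
N8N_WEBHOOK_URL = "http://localhost:5678/webhook-test/grafana-alert"


def build_test_request(url: str, description: str, instance: str) -> urllib.request.Request:
    """Build a POST request that mimics a firing alert notification."""
    body = {
        "status": "firing",
        "alerts": [
            {
                "status": "firing",
                "labels": {"alertname": "Nginx Down", "instance": instance, "n8n": "true"},
                "annotations": {"description": description},
            }
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_test_request(N8N_WEBHOOK_URL, "<recovery steps in natural language>", "web01.example")
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req, timeout=10)  # uncomment to actually send the test alert
```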

Considerations and future challenges

This verification showed that the "Description = Runbook" configuration is technically feasible.

This approach has the following advantages:

- Improved maintainability: To change the recovery logic, you only need to edit the alert Description in Grafana instead of modifying code.

- Reduced dependence on individuals: Procedures are written down explicitly and executed automatically, which removes the gap between documentation and actual practice.

On the other hand, several issues have come to light regarding practical operation.

- Safety (risk of hallucination): There is a risk that the AI will accidentally generate destructive commands (e.g., deleting data).

To apply this in a production environment, guardrails such as "only allow read-only commands" and "require human approval via Slack before execution" are necessary.

- Permissions management: The user that n8n runs commands as must have appropriately restricted permissions.
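One way the guardrail idea could be expressed in code is an allowlist check placed between the AI output and the execution step. The sketch below is an assumption-laden example rather than a complete safeguard: the allowlist contents and the function name `is_command_allowed` are hypothetical, and a real deployment would still combine this with restricted permissions and, where appropriate, human approval.

```python
import shlex

# Hypothetical allowlist of (program, subcommand) pairs the workflow may run.
ALLOWED = {
    ("systemctl", "status"),
    ("systemctl", "restart"),
}

# Shell metacharacters that could chain or redirect commands.
FORBIDDEN_CHARS = set(";&|><`$\n")


def is_command_allowed(command: str) -> bool:
    """Return True only for a single, allowlisted command with no shell tricks."""
    if any(ch in FORBIDDEN_CHARS for ch in command):
        return False
    try:
        tokens = shlex.split(command)
    except ValueError:  # e.g. unbalanced quotes
        return False
    if len(tokens) < 2:
        return False
    return (tokens[0], tokens[1]) in ALLOWED


for candidate in ["systemctl restart nginx", "rm -rf /", "systemctl restart nginx; rm -rf /"]:
    print(candidate, "->", is_command_allowed(candidate))
```

An allowlist is used here rather than a blocklist because the set of destructive commands is open-ended, while the set of commands a given runbook legitimately needs is usually small.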

Summary

By combining Grafana, n8n, and AI, we were able to build an automated recovery workflow that leverages the alert description as a dynamic runbook.

The approach of having AI generate commands has the potential to dramatically improve the efficiency of routine operational tasks.

In the future, we plan to continue verifying the design of safer execution flows and their application to complex failure response scenarios.
