Streamlining Kubernetes Pod Communication with Cloudflare Zero Trust and CoreDNS

#SRG (Service Reliability Group) mainly provides cross-functional support for the infrastructure of our media services, improving existing services, launching new ones, and contributing to OSS.

This article summarizes, from an operational perspective, how Cloudflare Zero Trust and CoreDNS can be used to solve the structural issues surrounding the "outbound exposure" and "inbound connections" of applications running on Kubernetes.


Kubernetes is an incredibly powerful platform, but as you use it, certain "just painful" aspects gradually emerge.

These are not accidents where the cluster suddenly breaks down one day. They are problems that take effect quietly:

- A mechanism that slowly increases costs without you even realizing it

- A flow that forces developers to perform ritualistic tasks

- Configurations that easily disrupt the balance between security and convenience

This article aims to unravel these "pains that accumulate in daily operations" at the network design and access control layers, and resolve them at the configuration level.

Why is it necessary to reconsider "Pod communication" now?

When you first start using Kubernetes, this is all you need.

- External exposure uses Ingress + ALB/GCLB

- Inbound access uses kubectl port-forward

- If authentication is required, add Basic Auth or OAuth2 Proxy

All of these are common configurations seen in documents and blogs, and there is nothing wrong with them.

However, things start to get a little awkward as the number of services and clusters starts to grow.

- The number of LBs has increased too much, causing fixed costs to gradually pile up.

- Implementing authentication into development tools is difficult, and you end up using Basic Auth

- There are so many port forwards that it becomes difficult to know which terminal is currently connected to which.

- As multi-cluster/multi-account environments become commonplace, connecting to development environments becomes a ritual.

None of these will break any particular functionality.

But they do slow down the developer experience and blur the line between security and operations.

This article describes how we restructured these accumulated pain points around Cloudflare Zero Trust.

Organizing the overall structure: Outward exposure and inward connection

The issues addressed in this article can be divided into two categories:

- The complexity of exposing Pods

  - Load Balancer cost issues

  - Authentication layer design issues

- The hassle of connecting inbound to Pods

  - Port Forward limits

  - Difficulty in developing in a multi-cluster/multi-account environment

In this article, we will organize the challenges in the order of "outward facing" → "inward facing," and then look at how we redesigned them using Cloudflare Zero Trust + CoreDNS.

Issue 1: Difficulty in publishing Pods

Traditional Method: Ingress + ALB/GCLB: Strengths and Weaknesses

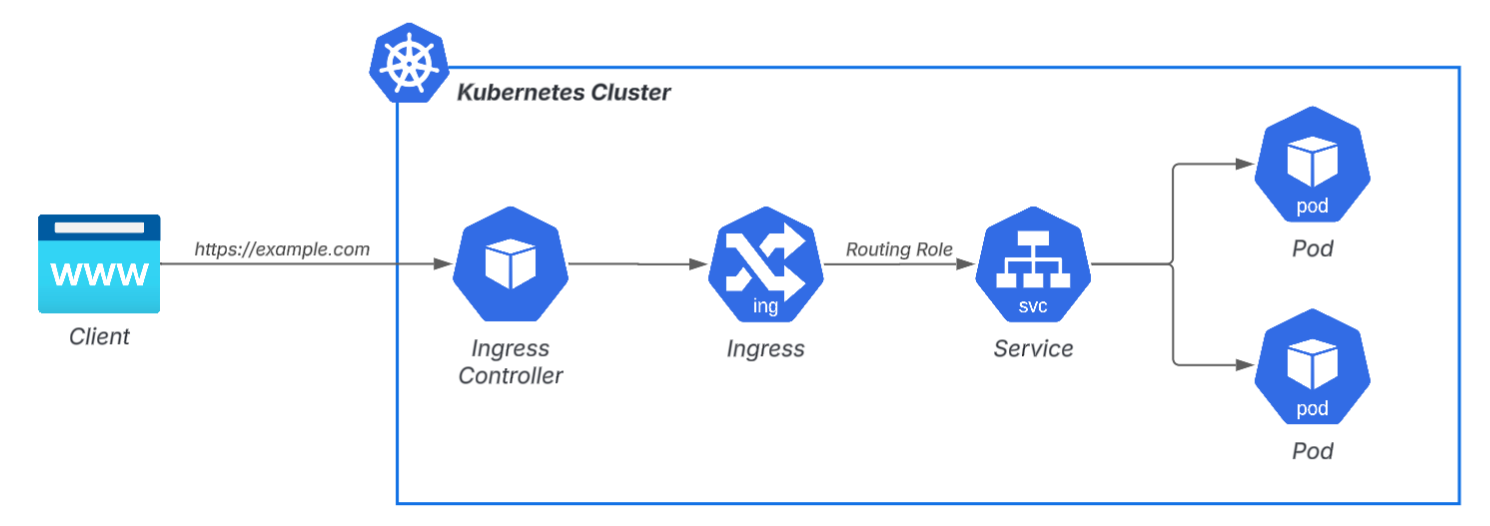

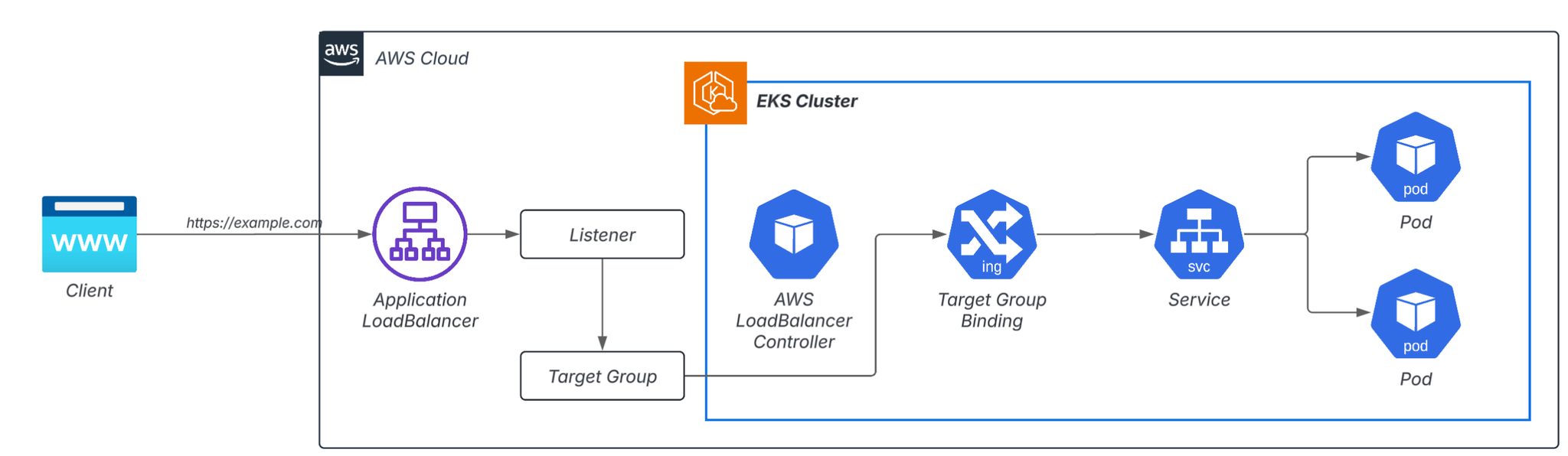

When exposing HTTP externally in Kubernetes, the most commonly used method is Ingress + managed LB (ALB/GCLB).

This system itself is excellent in terms of functionality and stability, but the following two points can cause problems in the field.

Load Balancer is expensive

As the development team grows and the number of applications increases, the number of LBs grows along with them.

- Settings UI (internal tool)

- Verification app

- Metrics Dashboard

- API Staging

The more services you want to publish, the more LBs you need. If you create an ALB for every tool that only a few people use, you eventually end up with dozens of ALBs across your environments. The cost of a single LB is not large, but it is a fixed cost that is multiplied by the number of LBs.
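To make the arithmetic concrete, here is a minimal sketch of how the fixed cost scales. The function name, the per-LB price, and the app counts are all hypothetical and chosen only for illustration; they are not real pricing.

```python
def monthly_lb_cost(num_apps: int, cost_per_lb: float, apps_per_lb: int = 1) -> float:
    """Fixed monthly cost when every `apps_per_lb` apps share one load balancer."""
    if num_apps < 0 or cost_per_lb < 0 or apps_per_lb < 1:
        raise ValueError("invalid input")
    # Ceiling division: a partially used LB still costs the full fixed fee.
    num_lbs = -(-num_apps // apps_per_lb)
    return num_lbs * cost_per_lb


# Hypothetical numbers: 4 internal tools in each of 3 environments,
# and an assumed fixed fee of 20.0 per LB per month.
print(monthly_lb_cost(num_apps=12, cost_per_lb=20.0))                 # one LB per app
print(monthly_lb_cost(num_apps=12, cost_per_lb=20.0, apps_per_lb=5))  # apps aggregated per LB
```

The second call shows why aggregation looks tempting on paper: the cost drops, but the operational side effects of sharing one LB are not captured by this model.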

To save money, ideas such as the following have been put forward:

- Listener Rule aggregation

- Port Forward operation without an ALB

- Directly exposing a NodePort

However, all of them have strong side effects on operations and UX, and none provides a fundamental solution.

The problem of where to place the authentication layer

Another problem is where to perform authentication.

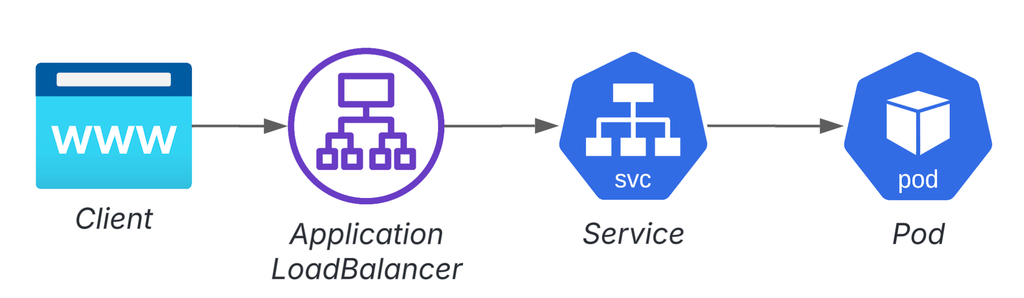

Example without authentication layer:

Where to place the authentication layer: Example 1: Authenticating the app itself

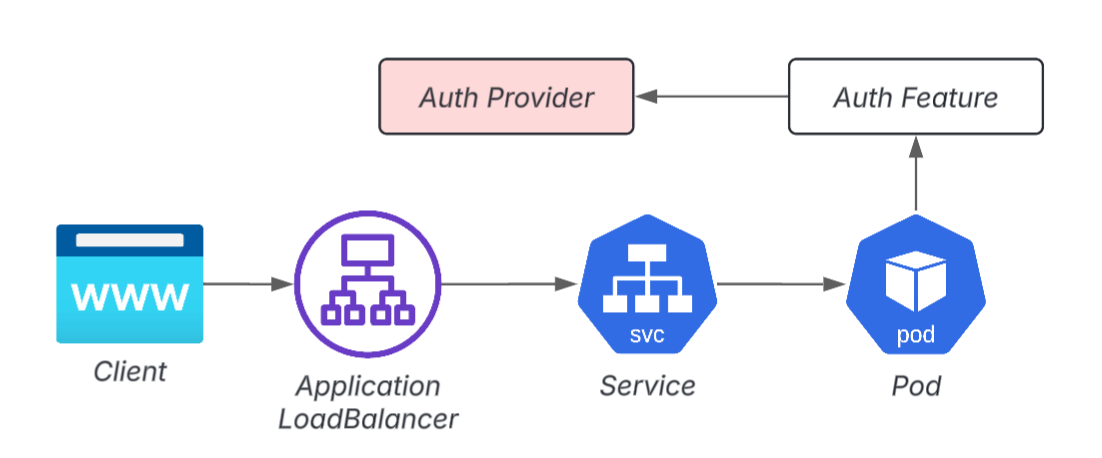

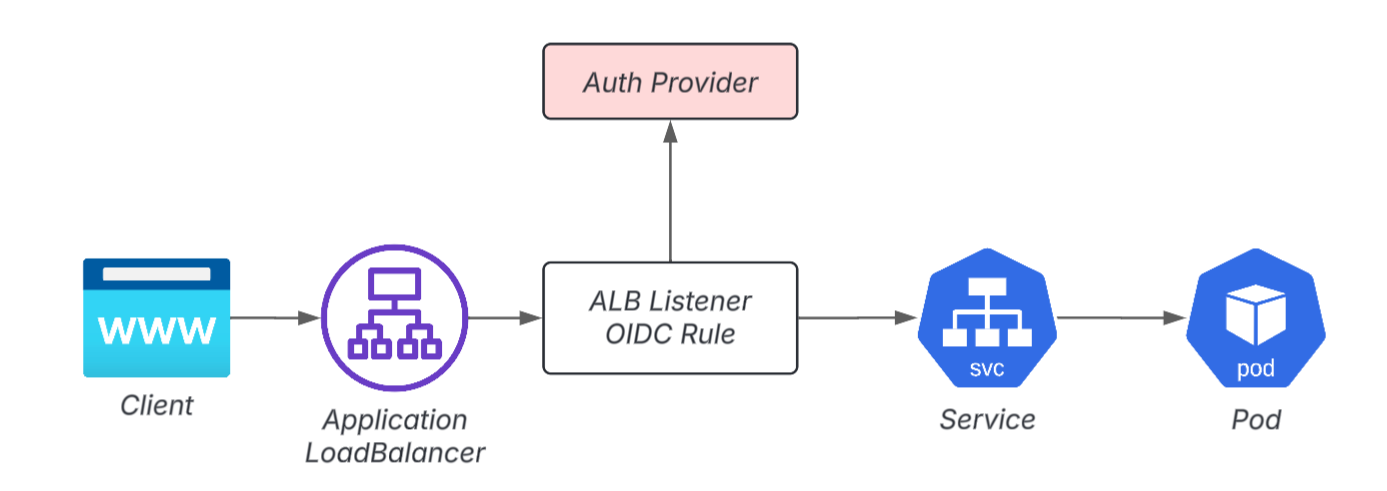

Example 2: Placement of authentication layer in front of Pod (ALB)

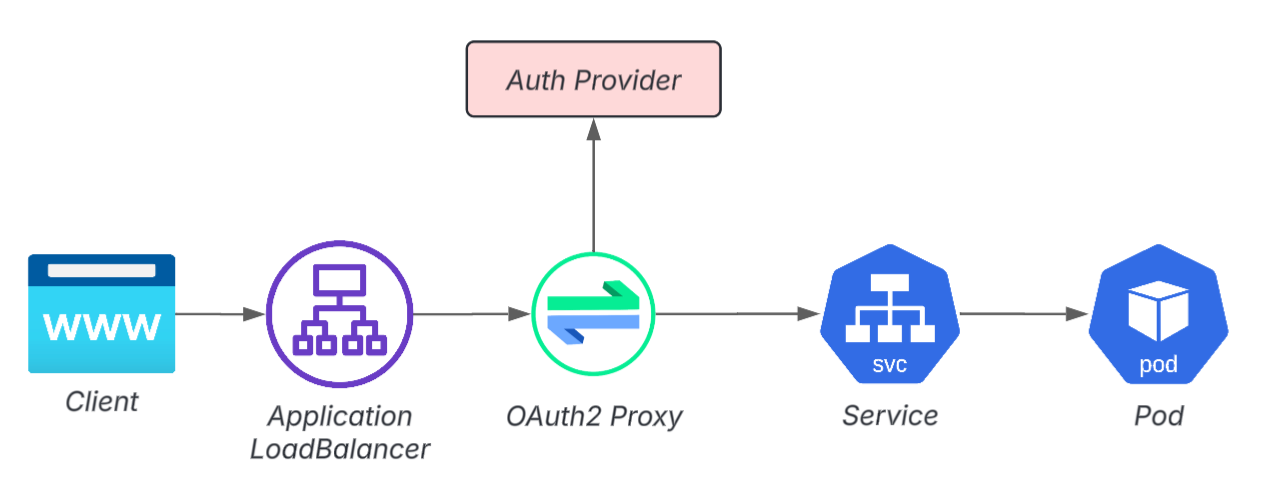

Example 3: Pod front end (OAuth2 Proxy)

In fact, authentication for development and internal applications is extremely difficult.

- Adding authentication functionality to an app is often difficult due to technical debt.

- Although it may seem convenient to set up OIDC authentication in ALB, it can complicate OIDC configuration, role management, and IaC.

- Adding an OAuth2 Proxy adds another layer to the configuration

Furthermore, users of published apps are often external partner companies, so it is not uncommon for an environment to be impossible to unify under SSO.

As a result, "Basic Auth operation" becomes widespread, and even internal applications tend to fall into an unhealthy security state.

Issue 2: Difficulty in connecting Pods inward

The Convenience and Darkness of Port Forward

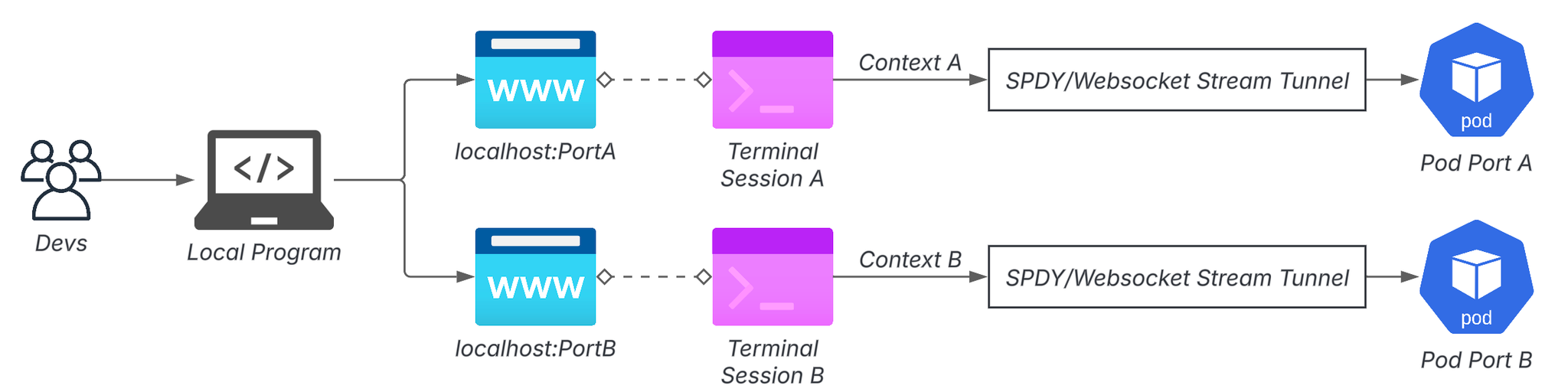

kubectl port-forward is convenient. However, the moment you start relying on it too much, your workflow starts to break down.

- The moment the terminal is disconnected, the connection also disappears.

- In a multi-cluster environment, multiple sessions must be maintained, and you must keep track of which terminal is connected to which pod.

- Identical local port numbers conflict, so managing them is surprisingly difficult.

Once you reach the point where development starts with setting up multiple port forwards, it has become a ritual rather than a tool.

Multi-cluster/multi-account is even worse

As organizations grow and the number of clusters and accounts increases, things become even more complicated.

- Dev / Stg / Prod are separate clusters

- AWS accounts are also separate for each product.

- Due to SaaS integration, there are cases where "this function is only available in Stg"

As a result, developers find themselves switching VPNs, accounts, contexts, etc. Before they know it, the network and terminal ritual begins before they can even begin development. When this process piles up, it's no wonder some people start to feel like multi-cluster, multi-account local development is nearly impossible.

Solution: Leveraging Cloudflare Zero Trust

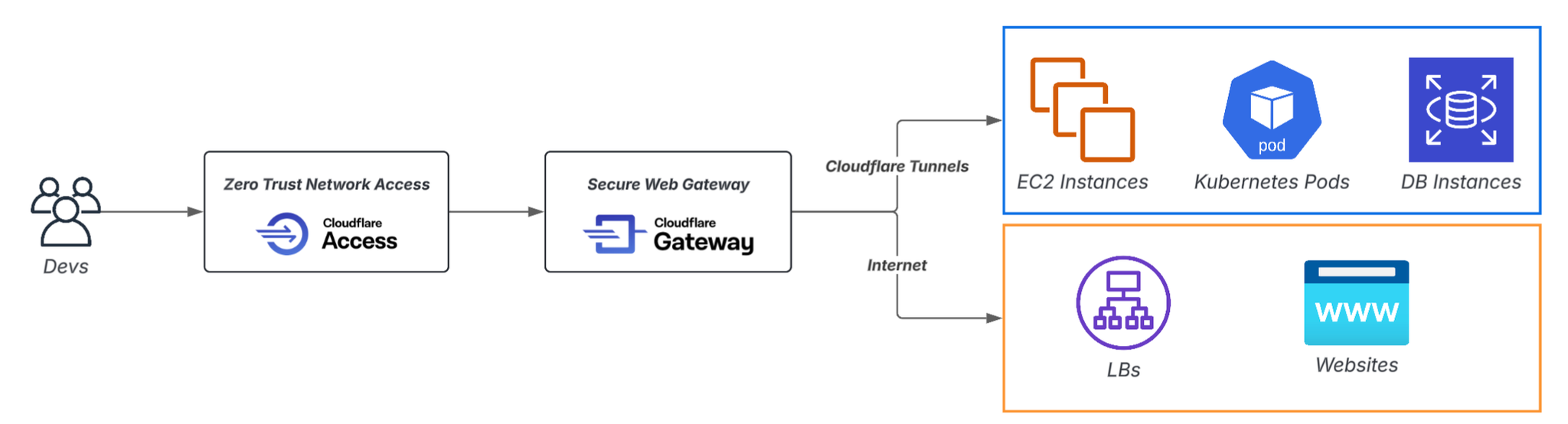

Cloudflare Zero Trust is primarily thought of as a way to protect SaaS and internal web apps, but when combined with Kubernetes it allows for a "fundamental redesign of pod communication."

Cloudflare's key features include:

- Access: Authentication + Zero Trust Access

  - Access Application: A unit for controlling access to each DNS record.

  - Access Policy: A policy that works in conjunction with Access Application to control access.

- Tunnel: A mechanism that creates a secure connection path from your internal network to Cloudflare

- WARP: Client that connects to the Tunnel

- Gateway: Various control functions based on Secure Web Gateway

  - Resolver Policy: DNS routing control

  - Network Policy: L4 layer routing control

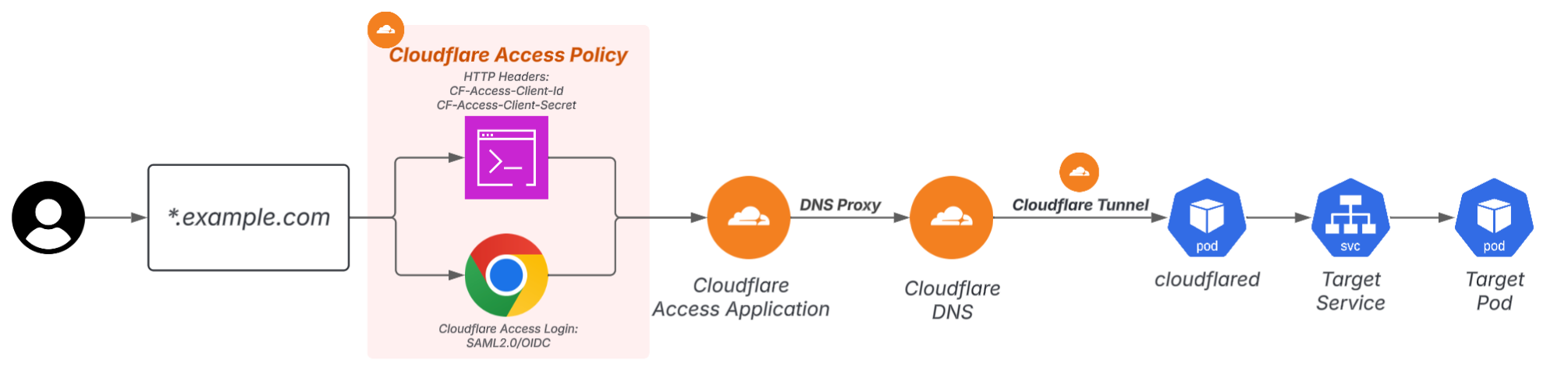

The solution to outward publishing: Access Application + Tunnel

Configuring Cloudflare Tunnel from within the cluster and protecting published applications with Cloudflare Access has the following advantages:

- Pods can be published without creating a load balancer

- DNS/certificates/authentication can be centralized on the Cloudflare side

- Authentication methods can be separated from the app and unified

With this configuration, the number of LBs does not increase even as the number of apps you want to publish grows. What increases is not ALBs but Access Applications on Cloudflare.

The authentication method is also unified under Access, so you can stop the proliferation of Basic Auth. In addition to Access login for human users, Access also provides an API-key-style method (a service token) that clients can use by sending HTTP headers.

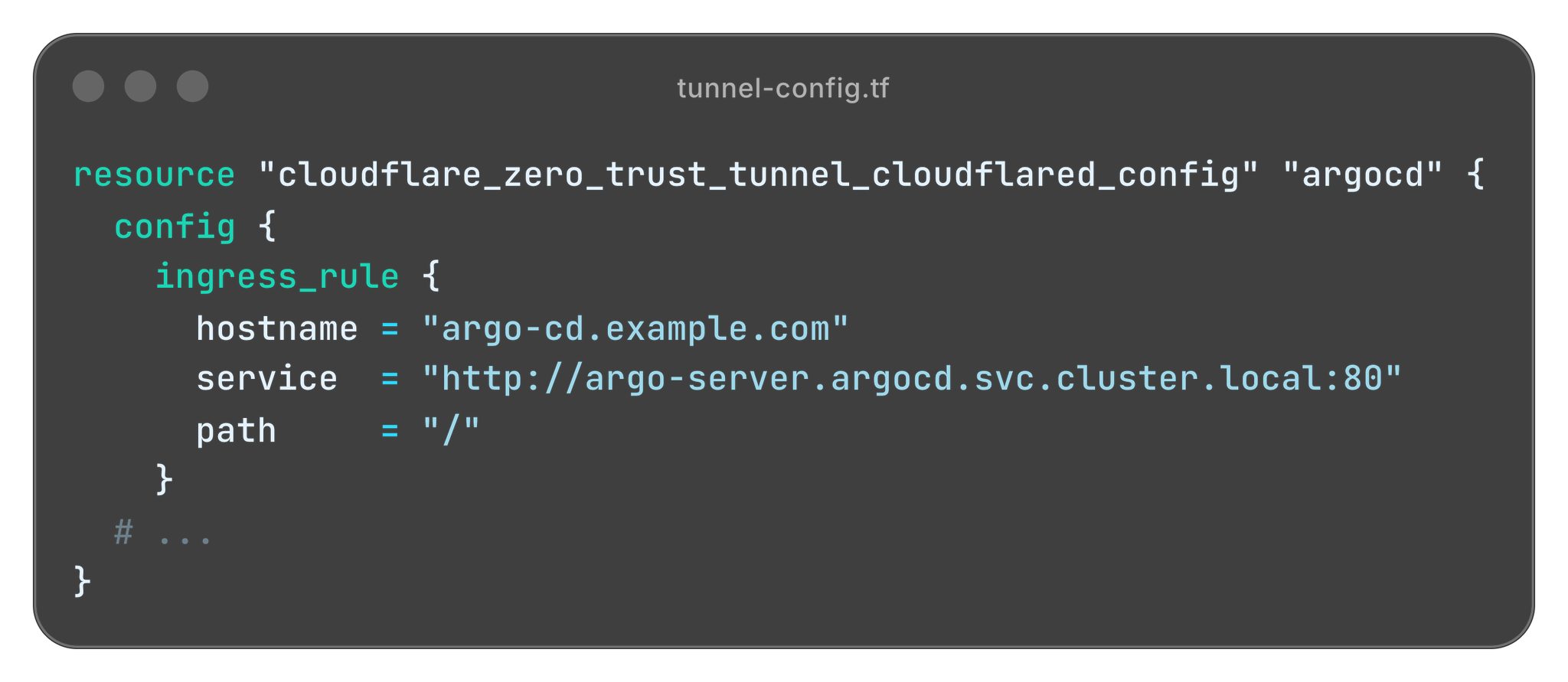

Configuration Example

For example, if you want to expose the ArgoCD UI directly to the Internet without going through an ALB, follow the steps below.

- Place cloudflared on the Kubernetes cluster running ArgoCD and create a Tunnel.

- Protect argocd.example.com with an Access Application.

- Publish the ArgoCD K8s Service on the Tunnel and register a DNS record for argocd.example.com.

TLS certificates are automatically managed by Cloudflare within Cloudflare's DNS Proxy and Access.

Inside the Tunnel (between Cloudflare → cloudflared → Pod) you can leave it as HTTP, which is also attractive as it significantly lowers the hurdle to switching to HTTPS.
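As a rough illustration of the Tunnel side, the sketch below builds ingress rules in the shape that cloudflared's configuration file uses (a list of hostname/service pairs ending in a catch-all rule) and models top-to-bottom matching. The helper names and the in-cluster Service URL are assumptions for this example, and the result is printed as JSON only for readability.

```python
import json


def build_ingress(routes: dict[str, str]) -> list[dict[str, str]]:
    """Build cloudflared-style ingress rules: one per hostname, plus a final catch-all."""
    rules = [{"hostname": host, "service": svc} for host, svc in routes.items()]
    # cloudflared expects the last rule to match everything; returning 404 is a common choice.
    rules.append({"service": "http_status:404"})
    return rules


def resolve_service(rules: list[dict[str, str]], hostname: str) -> str:
    """Return the service of the first matching rule (rules are checked top to bottom)."""
    for rule in rules:
        # A rule without a hostname is the catch-all and matches any request.
        if rule.get("hostname") in (None, hostname):
            return rule["service"]
    raise LookupError("no catch-all rule configured")


rules = build_ingress({
    # Hypothetical Service URL for the ArgoCD UI; plain HTTP between cloudflared and the Pod.
    "argocd.example.com": "http://argocd-server.argocd.svc.cluster.local:80",
})
print(json.dumps({"ingress": rules}, indent=2))
```

Exact hostname matching is a simplification here; wildcard hostnames and path matching are left out of this sketch.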

Design considerations

1. Cloudflared installation location

Cloudflared must be located on a network that can reach the target Pod.

- If it is not reachable, communication between Cloudflare Edge and Pod will not be established.

- Conversely, if you properly apply a Network Policy to cloudflared, you can build multi-stage network control such as "this Tunnel can only access this network."

2. Tunnel's default state is "No authentication"

- When you open communication to the target Pod on the Tunnel, a DNS record is created under cfargotunnel.com. In this state, no authentication is required and anyone can access it.

To avoid exposing an unauthenticated endpoint, create the resources in this order: Access Policy → Access Application → Tunnel Config → DNS Record
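One way to guard against publishing an unauthenticated hostname is a small pre-flight check that compares the hostnames routed by the Tunnel with the domains covered by Access Applications. The following is only a sketch: the function name and the sample hostnames are hypothetical, and matching is by exact hostname, which ignores wildcard or path-based Access Applications.

```python
def unprotected_hostnames(tunnel_hostnames: list[str], access_app_domains: list[str]) -> list[str]:
    """Return hostnames routed by the Tunnel that no Access Application covers."""
    protected = set(access_app_domains)
    return sorted(h for h in set(tunnel_hostnames) if h not in protected)


# Hypothetical inventory, e.g. collected from IaC state before applying changes.
tunnel_hostnames = ["argocd.example.com", "grafana.example.com"]
access_app_domains = ["argocd.example.com"]

missing = unprotected_hostnames(tunnel_hostnames, access_app_domains)
if missing:
    print("Refusing to publish without Access:", missing)
```

Running a check like this in CI before the Tunnel Config and DNS Record steps would surface a hostname that skipped the Access steps.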

Comparison with ALB

| Perspective | Traditional method | Cloudflare method |
|---|---|---|
| Cost | LB required for each app | No LB required; free for up to 50 users |
| Authentication layer placement | Must be placed in front of each app | Centralized management with Access |
| Configuration complexity | IaC at each layer | Cloudflare-related IaC |
| Certificate/DNS operations | Requires ACM/domain service management | Automatically managed by Cloudflare |

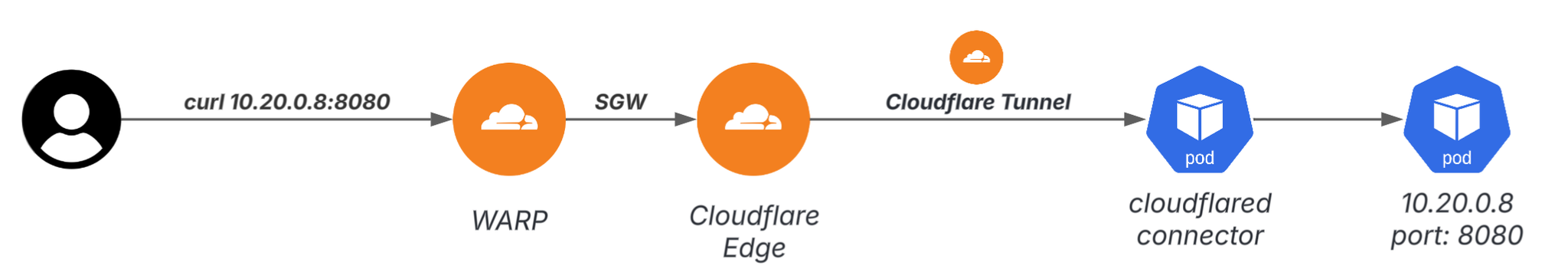

Resolving inbound connections: Tunnel + WARP + Resolver Policy + CoreDNS

To overcome the limitations of Port Forward, you need to create an environment where you can directly access Pods/Services from your local machine.

The method is roughly divided into two steps.

1. Make Pod/Service IP reachable (Tunnel + WARP)

By installing WARP on the user device and connecting the Pod network (within a VPC) to Cloudflare Edge via Cloudflare Tunnel, traffic can be sent directly to the Private IP, just like a VPN.

There is one thing to note here.

On AWS, Pod IPs are fine, but the Service CIDR is outside the VPC, so Service IPs cannot be reached directly from WARP. If you want to reach Pods directly, you need to use a Headless Service.
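The reachability difference can be checked with simple CIDR membership. In the sketch below, the VPC CIDR, the Service CIDR, and the sample addresses are hypothetical values picked for illustration; substitute the ranges of your own cluster.

```python
import ipaddress


def reachable_via_routes(ip: str, routed_cidrs: list[str]) -> bool:
    """True if `ip` falls inside any CIDR that is routed through the Tunnel."""
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in routed_cidrs)


VPC_CIDR = "10.0.0.0/16"        # hypothetical VPC range routed via the Tunnel
SERVICE_CIDR = "172.20.0.0/16"  # hypothetical ClusterIP range, outside the VPC

pod_ip = "10.0.42.17"       # a Pod IP allocated from the VPC
cluster_ip = "172.20.10.5"  # a Service ClusterIP

print(reachable_via_routes(pod_ip, [VPC_CIDR]))      # Pod IP is inside the routed range
print(reachable_via_routes(cluster_ip, [VPC_CIDR]))  # ClusterIP is not
```

A Headless Service sidesteps the problem because its DNS name resolves to Pod IPs rather than to a ClusterIP.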

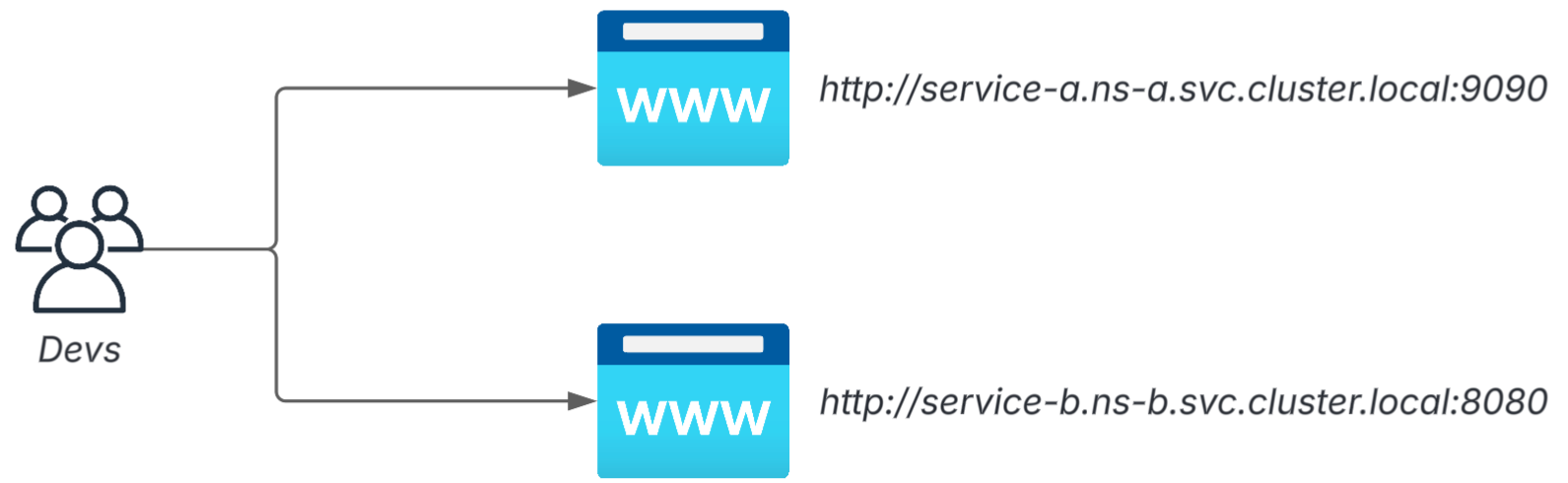

2. Make the Service FQDN resolvable via DNS (CoreDNS fixed IP + Resolver Policy)

Names under *.svc.cluster.local are resolved by CoreDNS. In other words, if you can query CoreDNS from your local machine, Pods/Services can be addressed uniformly by FQDN.

So, we will create the following configuration:

- Give CoreDNS a static IP address with an internal NLB

- Route queries for .svc.cluster.local to CoreDNS with a Resolver Policy

Then, a device with WARP turned on can access the Pods in that cluster simply by entering a URL such as https://stg-app.namespace.svc.cluster.local.

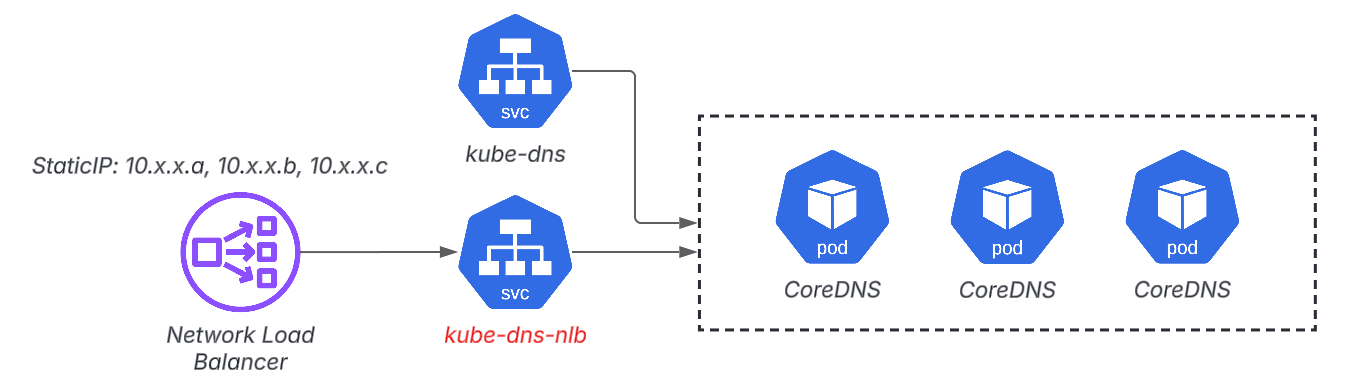

CoreDNS fixed IP address

Using an internal NLB with a static IP, the configuration consists of the existing kube-dns Service plus a kube-dns-nlb Service, as shown below:

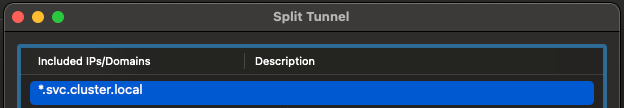

Split Tunnel

*.svc.cluster.local

*.svc.cluster.local

Resolver Policy

Finally, in the Cloudflare Gateway Resolver Policy, set the following rule for queries to .svc.cluster.local:

- Specify the static IP of the CoreDNS NLB as the name server.

- Forward the query as is.
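Conceptually, the rule behaves like a domain-suffix match that selects which resolver receives the query. The sketch below only models that decision locally; it is not Cloudflare's implementation, and the function name, the NLB address, and the "gateway-default" label are assumptions made for this example.

```python
def pick_resolver(qname: str, rules: list[tuple[str, str]], default: str) -> str:
    """Return the resolver of the first rule whose domain suffix matches `qname`."""
    name = qname.rstrip(".").lower()
    for suffix, resolver in rules:
        # Match on label boundaries so "notsvc.cluster.local" does not match.
        if name == suffix or name.endswith("." + suffix):
            return resolver
    return default


COREDNS_NLB_IP = "10.0.0.53"  # hypothetical static IP of the internal NLB in front of CoreDNS
rules = [("svc.cluster.local", COREDNS_NLB_IP)]

print(pick_resolver("stg-app.namespace.svc.cluster.local", rules, "gateway-default"))
print(pick_resolver("example.com", rules, "gateway-default"))
```

Queries that do not match the suffix keep going to the default resolver, so ordinary Internet name resolution on the device is unaffected.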

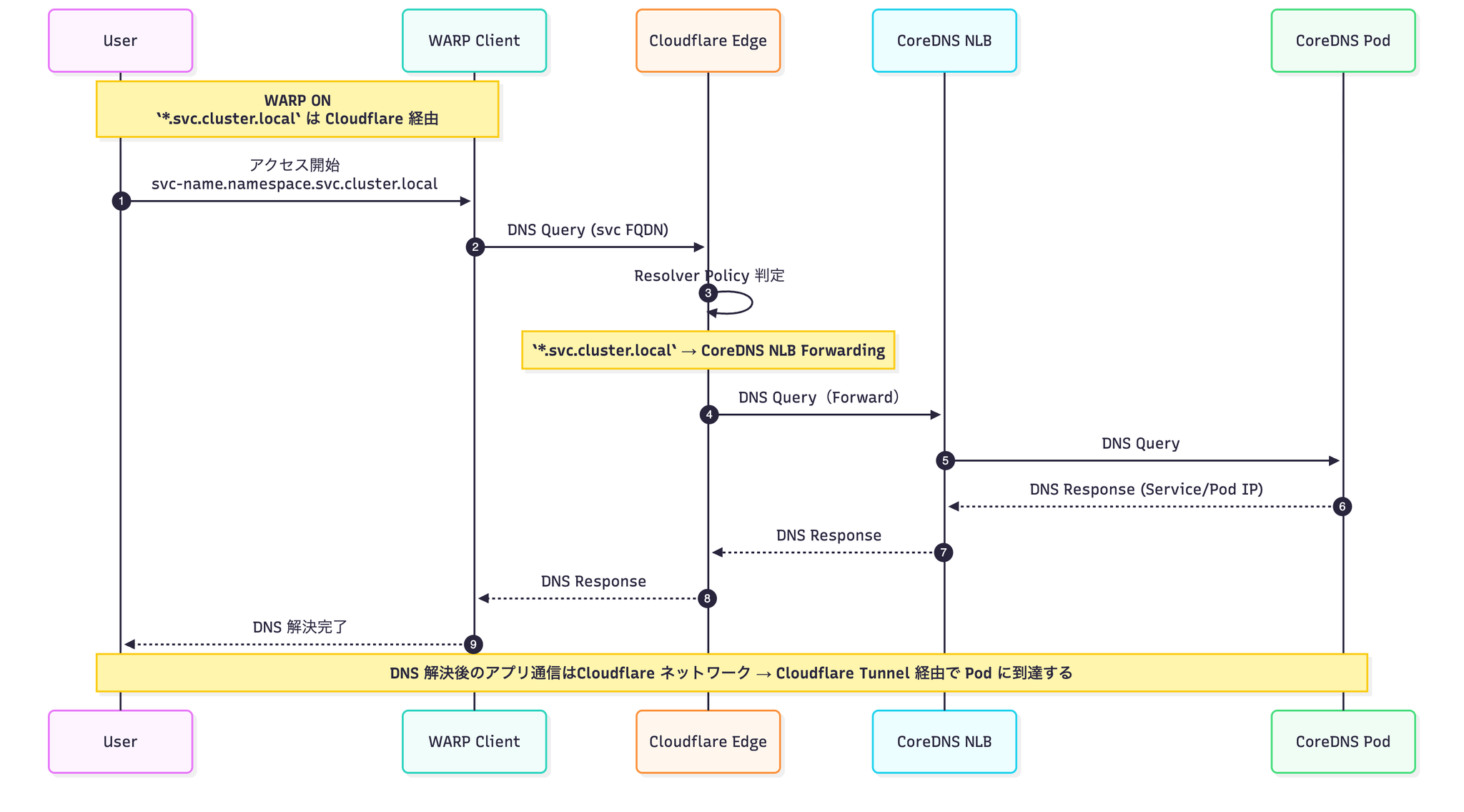

If we were to represent all the elements in one sequence diagram, it would look something like this:

(Sequence diagram for accessing https://stg-app.namespace.svc.cluster.local)

Multi-cluster support: Virtual Network × FQDN with environment name

As the number of clusters increases, it becomes clearer to include "which cluster" in the URL.

example:

stg-api.platform.svc.cluster.local

prd-api.platform.svc.cluster.local

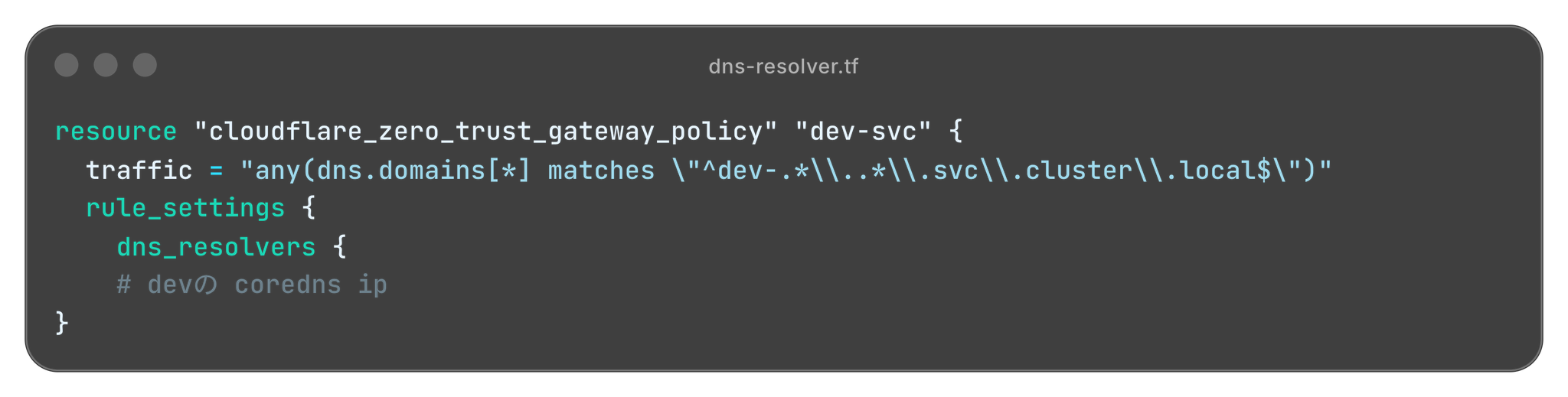

A combination of Cloudflare's Virtual Network and Resolver Policy allows these URLs to be routed to the CoreDNS of the correct cluster.

It can also flexibly accommodate patterns such as <env-name>-<app-name>.<namespace>.svc.cluster.local under *.svc.cluster.local.

A working Terraform example would look like this:

This means that the rules remain the same even for multi-cluster and multi-account environments, and developers can switch between worlds using only the FQDN.
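To illustrate the naming rule itself, here is a small Python sketch that splits an FQDN of the form <env-name>-<app-name>.<namespace>.svc.cluster.local and picks the CoreDNS endpoint for that environment. The mapping of environments to IP addresses and the function name are hypothetical; in the real setup this selection is made by Cloudflare's Virtual Network and Resolver Policy, not by client code.

```python
import re

# <env-name>-<app-name>.<namespace>.svc.cluster.local
FQDN_PATTERN = re.compile(
    r"^(?P<env>[a-z0-9]+)-(?P<app>[a-z0-9-]+)\.(?P<namespace>[a-z0-9-]+)\.svc\.cluster\.local$"
)

# Hypothetical static IPs of each cluster's CoreDNS NLB.
CLUSTER_DNS = {"stg": "10.10.0.53", "prd": "10.20.0.53"}


def route(fqdn: str) -> tuple[str, str, str, str]:
    """Split the FQDN and return (env, app, namespace, CoreDNS IP for that env)."""
    match = FQDN_PATTERN.match(fqdn.rstrip(".").lower())
    if match is None:
        raise ValueError(f"not an env-prefixed cluster FQDN: {fqdn}")
    env = match["env"]
    if env not in CLUSTER_DNS:
        raise KeyError(f"unknown environment: {env}")
    return env, match["app"], match["namespace"], CLUSTER_DNS[env]


print(route("stg-api.platform.svc.cluster.local"))
print(route("prd-api.platform.svc.cluster.local"))
```

Because the environment is the first hyphen-separated token, app names may themselves contain hyphens without ambiguity, as long as environment names do not.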

Comparison with Port Forward: How different are the structures?

| Perspective | Port Forward | Cloudflare Zero Trust configuration |
|---|---|---|
| Continuity | Shares its fate with the terminal | Stable as long as WARP is alive |
| Multi-cluster | Context/terminal management hell | Unified access with FQDN |
| Development experience | Constantly re-creating port forwards | Just hit the URL |

The key is to change the configuration to avoid the concept of port forwarding, rather than trying to make port forwarding more convenient.

Conclusion

What I like most about this configuration is that we are using Cloudflare not as a CDN or DDoS protection tool, but as a "communication control infrastructure for Kubernetes."

Zero Trust is not simply a system that creates a "gate from outside the company to inside the company."

- Reorganizing Pod communication patterns

- Unifying developer access paths

- Consolidating authentication, authorization, and encryption in one place

By reviewing things from these perspectives, we feel we can streamline both Kubernetes' "external exposure" and "internal connections" at the configuration level.

Used in this way, Cloudflare Zero Trust goes beyond being a "simple replacement" for Ingress or VPN and serves as an option for a new operational architecture.

I hope this method will help you improve your Kubernetes operations and developer experience.

If you are interested in SRG, please contact us here.