Chaos Engineering Best Practices on Google Cloud - Fault Injection

My name is Ohara and I work in the Service Reliability Group (SRG) of the Media Headquarters.

SRG (Service Reliability Group) mainly provides cross-functional infrastructure support for our media services: improving existing services, launching new ones, and contributing to OSS.

This article introduces chaos engineering on Google Cloud and how to practice fault injection with Chaos Toolkit and LitmusChaos.


Introduction

We will introduce chaos engineering using the Chaos Toolkit and LitmusChaos.

First, we describe the types of fault experiments we run with each tool.

Chaos Toolkit Fault Experiments

We inject faults into the load balancer. When running a fault experiment, specify parameters that limit the scope of impact.

| Fault type | Overview | Parameters to limit the scope of impact |
|---|---|---|
| inject_traffic_delay | Latency degradation | FQDN, Path |
| inject_traffic_faults | Increased error rate | FQDN, Path |

LitmusChaos Fault Experiments

We inject faults into GKE. For Pod faults, the scope of impact can be limited by namespace, label, and so on. Network faults can additionally be limited to specific destination IPs and hosts, which are passed as parameters.

| Fault type | Overview | Parameters to limit the scope of impact |
|---|---|---|
| pod-delete | Pod deletion | Namespace, Label |
| pod-cpu-hog | Pod CPU load | Namespace, Label |
| pod-memory-hog | Pod memory load | Namespace, Label |
| pod-network-latency | Pod network latency degradation | Namespace, Label, Destination IP, Host |
| pod-network-loss | Increased Pod network error rate (packet loss) | Namespace, Label, Destination IP, Host |
| node-restart | GKE node restart | Zone (AZ), Label |
| node-cpu-hog | GKE node CPU load | Zone (AZ), Label |
| node-memory-hog | GKE node memory load | Zone (AZ), Label |
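The Python snippet below sketches how these scoping parameters map onto Litmus resources. It builds a ChaosEngine manifest for pod-network-latency:

- The namespace and label go into `spec.appinfo`.
- The latency and destination settings go into the experiment's environment variables (`NETWORK_LATENCY`, `TOTAL_CHAOS_DURATION`, `DESTINATION_IPS`, `DESTINATION_HOSTS`).

The engine name, the `litmus-admin` service account, and the `deployment` app kind are assumptions for illustration. Check the LitmusChaos experiment documentation for the version you run.

```python
import json
from typing import Optional


def build_network_latency_engine(
    name: str,
    namespace: str,
    app_label: str,
    latency_ms: int,
    duration_s: int,
    destination_ips: Optional[list[str]] = None,
    destination_hosts: Optional[list[str]] = None,
) -> dict:
    """Build a ChaosEngine for pod-network-latency scoped by namespace, label and destination."""
    env = [
        {"name": "NETWORK_LATENCY", "value": str(latency_ms)},      # added latency in ms
        {"name": "TOTAL_CHAOS_DURATION", "value": str(duration_s)},  # seconds
    ]
    # Optionally restrict the fault to traffic towards specific IPs / hosts.
    if destination_ips:
        env.append({"name": "DESTINATION_IPS", "value": ",".join(destination_ips)})
    if destination_hosts:
        env.append({"name": "DESTINATION_HOSTS", "value": ",".join(destination_hosts)})

    return {
        "apiVersion": "litmuschaos.io/v1alpha1",
        "kind": "ChaosEngine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "engineState": "active",
            # Namespace + label select which Pods are affected.
            "appinfo": {"appns": namespace, "applabel": app_label, "appkind": "deployment"},
            "chaosServiceAccount": "litmus-admin",  # assumption: admin-mode service account
            "experiments": [
                {"name": "pod-network-latency", "spec": {"components": {"env": env}}}
            ],
        },
    }


if __name__ == "__main__":
    engine = build_network_latency_engine(
        "nginx-network-latency", "default", "app=nginx", 2000, 60,
        destination_hosts=["api.example.com"],  # hypothetical destination
    )
    # kubectl also accepts JSON manifests, e.g. pipe this output into `kubectl apply -f -`.
    print(json.dumps(engine, indent=2))
```

Node faults would be scoped in a similar way, for example by selecting nodes via a zone label. That variant is not covered by this sketch.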

Environment Preparation

Set up the following tools:

- gcloud

- helm

- argo cli

- Python

- chaostoolkit

- chaostoolkit-google-cloud-platform
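A small Python check like the one below is a quick way to confirm the setup before moving on. It makes two assumptions:

- The CLIs are on `PATH` under their usual command names: `gcloud`, `helm`, `argo`, and `chaos` (the command installed by chaostoolkit).
- The Python packages import as `chaostoolkit` and `chaosgcp`.

```python
import importlib.util
import shutil

# Command names assumed for the tools listed above; "chaos" is installed by chaostoolkit.
CLI_TOOLS = ["gcloud", "helm", "argo", "chaos"]
# Import names of chaostoolkit and chaostoolkit-google-cloud-platform.
PY_MODULES = ["chaostoolkit", "chaosgcp"]


def missing_tools() -> list[str]:
    """Return the CLI tools and Python modules that could not be found."""
    missing = [tool for tool in CLI_TOOLS if shutil.which(tool) is None]
    missing += [mod for mod in PY_MODULES if importlib.util.find_spec(mod) is None]
    return missing


if __name__ == "__main__":
    missing = missing_tools()
    print("All tools found" if not missing else f"Missing: {', '.join(missing)}")
```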

ChaosToolkit - Hands-on

Experiment definitions are managed in JSON.

Below is a sample experiment that increases the LB error rate. It rewrites the route rules in the load balancer's URL map resource. Setting values can be defined in `configuration` or read from environment variables. As explained in detail later, they can also be obtained dynamically.

There are three important components, plus an optional fourth:

- configuration

  - Defines setting values as variables

- method

  - Defines the fault injection steps (multiple definitions possible)

- rollbacks

  - Restores the original state after the fault injection period has elapsed (multiple definitions possible)

- steady-state-hypothesis (optional)

  - Verifies that the target is functioning properly
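To make the structure concrete, here is a hypothetical experiment skeleton with these components, built as a Python dict and printed as JSON. It is not the author's definition. Note the following assumptions:

- The `chaosgcp.lb.actions` module and `inject_traffic_faults` follow the fault types in the table at the top of this article.
- The rollback function `remove_fault_injection_traffic_policy` and all argument names are assumptions. Verify them against the chaostoolkit-google-cloud-platform documentation.
- The URL, path, environment variable name, and timings are placeholders.
- Project and credential settings are omitted.

```python
import json

experiment = {
    "title": "LB error rate degradation",
    "description": "Return HTTP 503 for part of the traffic on one path of the URL map",
    # configuration: setting values as variables (inline, or read from an environment variable)
    "configuration": {
        "url_map": {"type": "env", "key": "LB_URL_MAP"},  # hypothetical env var name
        "path_matcher": "my-path-matcher",                # placeholder
        "target_path": "/api/*",                          # placeholder
        "health_url": "https://example.com/healthz",      # placeholder
    },
    # steady-state-hypothesis (optional): checked before and after the method runs
    "steady-state-hypothesis": {
        "title": "Service responds normally",
        "probes": [
            {
                "type": "probe",
                "name": "service-returns-200",
                "tolerance": 200,
                "provider": {"type": "http", "url": "${health_url}", "timeout": 5},
            }
        ],
    },
    # method: the fault injection itself; "pauses" keeps the fault active for 120 s
    "method": [
        {
            "type": "action",
            "name": "inject-traffic-faults",
            "provider": {
                "type": "python",
                "module": "chaosgcp.lb.actions",
                "func": "inject_traffic_faults",
                "arguments": {  # argument names are assumptions
                    "url_map": "${url_map}",
                    "target_name": "${path_matcher}",
                    "target_path": "${target_path}",
                    "http_status": 503,
                    "impacted_percentage": 50.0,
                },
            },
            "pauses": {"after": 120},
        }
    ],
    # rollbacks: restore the original route rules after the fault period
    "rollbacks": [
        {
            "type": "action",
            "name": "remove-traffic-faults",
            "provider": {
                "type": "python",
                "module": "chaosgcp.lb.actions",
                "func": "remove_fault_injection_traffic_policy",  # assumed name
                "arguments": {
                    "url_map": "${url_map}",
                    "target_name": "${path_matcher}",
                    "target_path": "${target_path}",
                },
            },
        }
    ],
}

if __name__ == "__main__":
    print(json.dumps(experiment, indent=2))
```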

Run this experiment (I run it via uv because I manage packages with uv).

Variable values can be supplied from a file with the `--var-file` option, and execution logs are written to `chaostoolkit.log`. A latency degradation experiment can be defined in the same way.
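The run step can be wrapped in Python as in the minimal sketch below. `chaos run` and its `--var-file` option are part of the Chaos Toolkit CLI. The file names `experiment.json` and `vars.json` are placeholders. Prefixing the command with `uv run` assumes the project is managed with uv, as described above.

```python
import subprocess
from typing import Optional


def chaos_run_command(experiment: str, var_file: Optional[str] = None, use_uv: bool = True) -> list[str]:
    """Build the command line for running a Chaos Toolkit experiment."""
    cmd = ["uv", "run"] if use_uv else []
    cmd += ["chaos", "run"]
    if var_file:
        # Values loaded from this file become available to the experiment's ${...} placeholders.
        cmd += ["--var-file", var_file]
    cmd.append(experiment)
    return cmd


def run_experiment(experiment: str, var_file: Optional[str] = None) -> int:
    """Run the experiment; by default chaos writes chaostoolkit.log and journal.json here."""
    return subprocess.run(chaos_run_command(experiment, var_file)).returncode


if __name__ == "__main__":
    print(" ".join(chaos_run_command("experiment.json", "vars.json")))
```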

ChaosToolkit - Challenges

We will introduce the challenges we encountered in implementing the experiment and how we solved them.

Challenge 1: Obtaining setting values dynamically

To set dynamic values in the definition file, we considered using a template engine to expand variables with processing outside Chaos Toolkit. However, we didn't want to compromise the readability of the JSON too much. Instead, we looked for a way to obtain values dynamically with Chaos Toolkit's own features.

So I used Chaos Toolkit's variable substitution feature.

Here's an excerpt from the definition:

The `get-url-map-target-name-probe` probe obtains the values at run time. This makes it possible to configure experiment settings automatically for each environment, reducing the effort of maintaining a separate configuration file per environment.
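Chaos Toolkit placeholders use the `${name}` syntax and are resolved from the configuration. The snippet below imitates that effect with Python's `string.Template`, which uses the same syntax. It shows how a value looked up at run time ends up in an action's arguments. It is an illustration only, not chaoslib's implementation. `lookup_path_matcher` is a hypothetical stand-in for a probe such as `get-url-map-target-name-probe`.

```python
from string import Template

# Static values, as they might appear in the "configuration" block (placeholders).
configuration = {"url_map": "k8s2-um-example", "target_path": "/api/*"}


def lookup_path_matcher(url_map: str) -> str:
    """Hypothetical lookup; in the article this role is played by a probe."""
    return f"{url_map}-matcher"


# A value obtained at run time is added alongside the static ones.
configuration["path_matcher"] = lookup_path_matcher(configuration["url_map"])

# Action arguments written with placeholders, as in the experiment JSON.
arguments = {
    "url_map": "${url_map}",
    "target_name": "${path_matcher}",
    "target_path": "${target_path}",
}

resolved = {key: Template(value).safe_substitute(configuration) for key, value in arguments.items()}
print(resolved)
```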

Challenge 2: Obtaining load balancer configuration elements dynamically

When running a fault injection experiment against a load balancer, the experiment definition must specify the target URL map name and path matcher name.

These resource names are generated automatically by Google Cloud, so identifying them manually is time-consuming and error-prone.

To address this, I created a script, `actions/url_map_info.py`. The definition excerpt in the previous section includes the settings for running it.

The script obtains the required information dynamically through the following steps:

- Resolve the given domain name to an IP address via DNS

- Look up the frontend (forwarding rule) associated with that IP address

- Parse that information to find the relevant URL map resource

- Extract the URL map name and the path matcher name
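The four steps above can be sketched in Python as follows. This is a hypothetical reimplementation, not the author's `url_map_info.py`. It rests on these assumptions:

- The target is a global external Application Load Balancer.
- It calls `gcloud ... --format=json` rather than a client library.
- It takes the first forwarding rule whose `IPAddress` matches.
- Host matching uses `fnmatch`, which only approximates URL map wildcard rules.

```python
import fnmatch
import json
import socket
import subprocess


def gcloud_json(args: list[str]) -> object:
    """Run a gcloud command and parse its JSON output."""
    result = subprocess.run(
        ["gcloud", *args, "--format=json"], check=True, capture_output=True, text=True
    )
    return json.loads(result.stdout)


def last_segment(resource_url: str) -> str:
    """Return the resource name at the end of a Compute API URL."""
    return resource_url.rstrip("/").rsplit("/", 1)[-1]


def find_forwarding_rule(rules: list[dict], ip: str) -> dict:
    """Step 2: find the frontend (forwarding rule) that uses the IP."""
    for rule in rules:
        if rule.get("IPAddress") == ip:
            return rule
    raise LookupError(f"no forwarding rule uses {ip}")


def proxy_describe_args(target_url: str) -> list[str]:
    """Build the gcloud command that describes the rule's target proxy."""
    name = last_segment(target_url)
    if "/targetHttpsProxies/" in target_url:
        return ["compute", "target-https-proxies", "describe", name, "--global"]
    if "/targetHttpProxies/" in target_url:
        return ["compute", "target-http-proxies", "describe", name, "--global"]
    raise ValueError(f"unsupported target: {target_url}")


def find_path_matcher(url_map: dict, domain: str) -> str:
    """Step 4: pick the path matcher whose host rule matches the domain."""
    for host_rule in url_map.get("hostRules", []):
        if any(fnmatch.fnmatch(domain, pattern) for pattern in host_rule.get("hosts", [])):
            return host_rule["pathMatcher"]
    raise LookupError(f"no host rule matches {domain}")


def lookup_url_map(domain: str) -> tuple[str, str]:
    ip = socket.gethostbyname(domain)  # step 1: DNS resolution
    rules = gcloud_json(["compute", "forwarding-rules", "list", "--global"])
    rule = find_forwarding_rule(rules, ip)  # step 2: frontend
    proxy = gcloud_json(proxy_describe_args(rule["target"]))
    url_map_name = last_segment(proxy["urlMap"])  # step 3: URL map
    url_map = gcloud_json(["compute", "url-maps", "describe", url_map_name, "--global"])
    return url_map_name, find_path_matcher(url_map, domain)  # step 4: names
```

For example, `lookup_url_map("api.example.com")` (a hypothetical domain) would return the URL map name and path matcher name to plug into the experiment configuration.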

This means that when you run a failure test, you only need to specify the domain name, without having to worry about complex resource names.

LitmusChaos - Preparing the execution environment

Before we get started, let's look at the LitmusChaos execution environment, which we briefly touched on in the tool selection section. Litmus has two planes: the Control Plane and the Execution Plane. Strictly speaking, experiments can run with the Execution Plane alone, but here we build the whole environment.

Deploy the Control Plane with Helm.

To deploy only the minimum necessary components, adjust the parameters in values.yaml.

values.yaml

The frontend is the WebUI component.
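The Helm step can be scripted as below. The repository URL and the `litmuschaos/litmus` chart are the ones published by the LitmusChaos project. The release name `chaos`, the `litmus` namespace, and the `values.yaml` path are assumptions; the values file is the one adjusted above.

```python
import subprocess

REPO_NAME = "litmuschaos"
REPO_URL = "https://litmuschaos.github.io/litmus-helm/"


def control_plane_commands(release: str = "chaos", namespace: str = "litmus",
                           values_file: str = "values.yaml") -> list[list[str]]:
    """Helm commands that install (or upgrade) the Litmus Control Plane."""
    return [
        ["helm", "repo", "add", REPO_NAME, REPO_URL],
        ["helm", "repo", "update"],
        # upgrade --install installs on the first run and upgrades on later runs
        ["helm", "upgrade", "--install", release, f"{REPO_NAME}/litmus",
         "--namespace", namespace, "--create-namespace", "-f", values_file],
    ]


def deploy_control_plane(**kwargs: str) -> None:
    for cmd in control_plane_commands(**kwargs):
        subprocess.run(cmd, check=True)  # stop at the first failing command
```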

Log in to the frontend with the configured user and password, then deploy the Execution Plane by following the document below.

Download the manifest and run kubectl apply.

The Litmus execution environment is now ready.

LitmusChaos - Practice

From here, we will explain the mechanism for actually injecting faults and put it into practice.

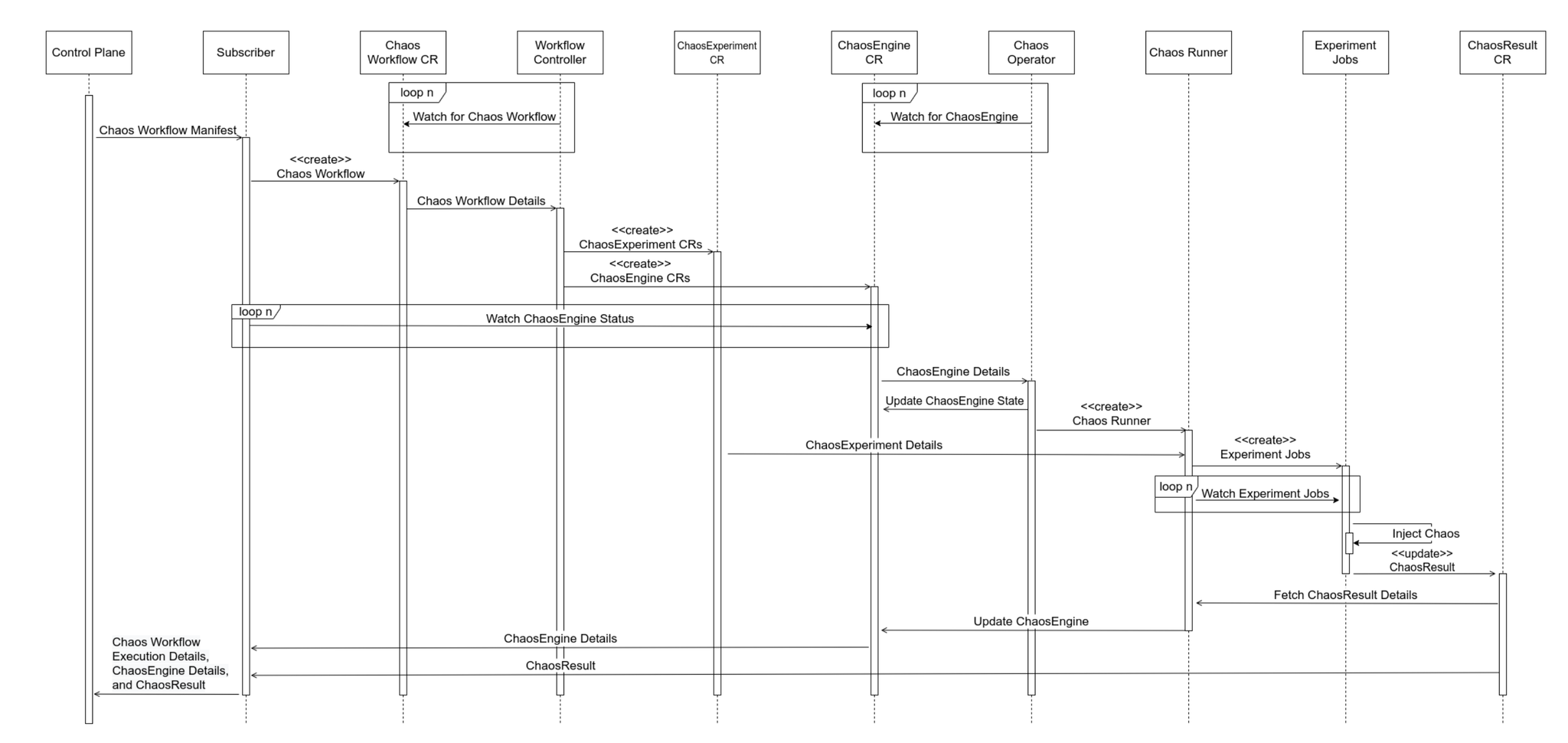

The sequence diagram shows that deploying the Chaos Workflow custom resource triggers the fault injection process.

The workflow is written in Argo Workflow format. Besides the Chaos Workflow CR itself, it defines and applies two types of custom resources:

- Chaos Workflow CR

  - ChaosExperiment CR

  - ChaosEngine CR

Sample workflow: pod-delete

This is a workflow that was created simply by selecting the Add Experiment > pod-delete template from the WebUI. After creating it, download the manifest.

Let's run the workflow above. This time, we'll run a fault experiment that deletes the chaos-exporter, which we don't use. Note that this workflow contains an ID specific to its Litmus execution environment, so copying and pasting it as-is will not work elsewhere. Apply a workflow created in your own environment.

When you apply the workflow, you will see that three custom resources have been created and multiple Pods are running. If something is not working properly for some reason, check the status of these resources.
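A helper like the following can make the status check easier. It is a sketch that assumes `kubectl` access to the namespace where the workflow runs. It lists three resources and pulls out the status field commonly used for each:

- the Argo `Workflow`
- the `ChaosEngine`
- the `ChaosResult`, which Litmus creates to record each experiment's verdict

These status field names are assumptions to double-check against your Litmus version.

```python
import json
import subprocess


def get_resources(namespace: str) -> dict:
    """Fetch the workflow, engine and result resources as one kubectl List."""
    out = subprocess.run(
        ["kubectl", "get",
         "workflows.argoproj.io,chaosengines.litmuschaos.io,chaosresults.litmuschaos.io",
         "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def summarize(resource_list: dict) -> list[tuple[str, str, str]]:
    """Return (kind, name, state) for each resource in the List."""
    rows = []
    for item in resource_list.get("items", []):
        kind = item.get("kind", "")
        name = item.get("metadata", {}).get("name", "")
        status = item.get("status") or {}
        if kind == "Workflow":
            state = status.get("phase", "Unknown")
        elif kind == "ChaosEngine":
            state = status.get("engineStatus", "Unknown")
        elif kind == "ChaosResult":
            exp = status.get("experimentStatus") or {}
            state = f"{exp.get('phase', 'Unknown')}/{exp.get('verdict', 'Unknown')}"
        else:
            state = "Unknown"
        rows.append((kind, name, state))
    return rows
```

Usage would look like `for row in summarize(get_resources("litmus")): print(*row)`, with the namespace adjusted to your environment.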

Once the status shows `Completed`, the experiment has finished.

Next is a more advanced version.

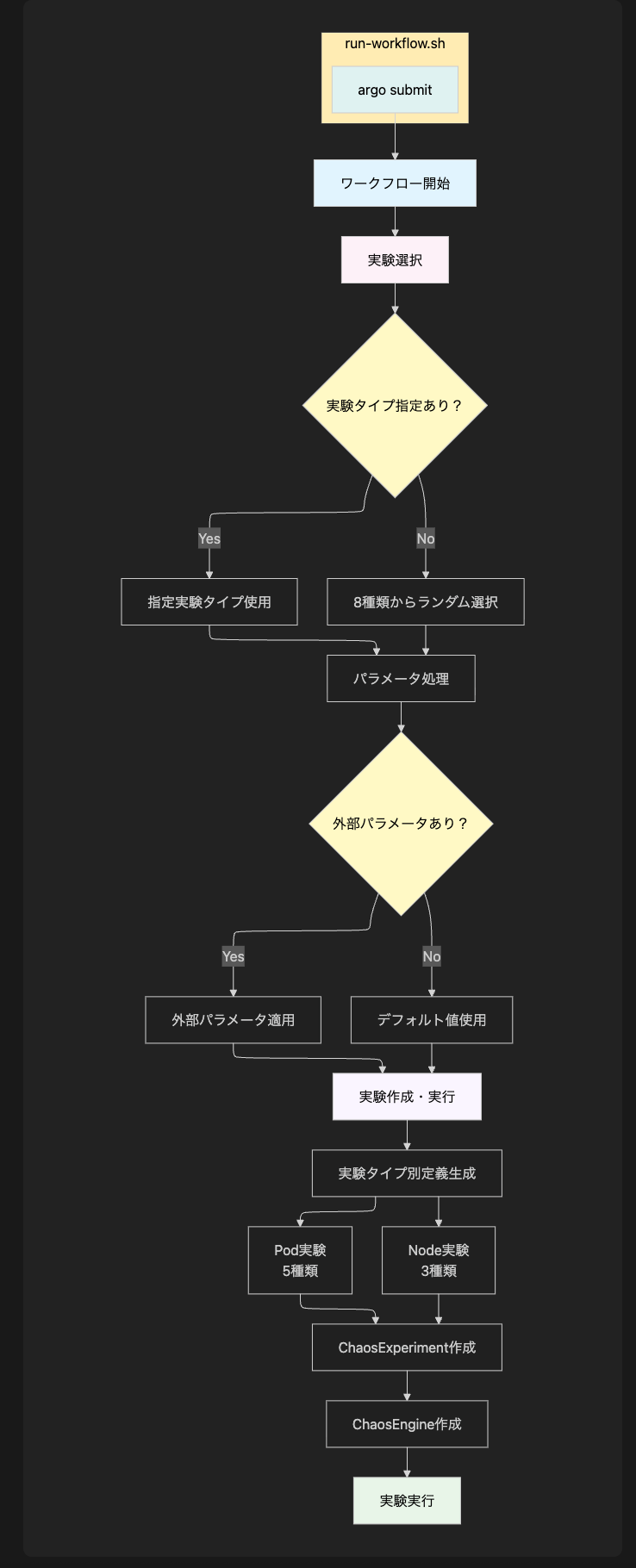

At the beginning, I introduced the fault types to test with LitmusChaos; now I will implement a workflow that injects them. I put everything into a single workflow, because splitting it across files would mean writing similar steps repeatedly. The YAML file is too large to show here, so I will explain it with a flow diagram like this one.

The workflow is submitted with the argo CLI using the `--wait` option.

LitmusChaos - Challenges

Challenge 1: Managing multiple experiment types

This is handled by a wrapper script, `run-workflow.sh`.

Challenge 2: Executing a workflow and passing parameters

Originally, running a workflow from a script was inefficient. The script had to replace workflow parameters with sed, deploy the result with kubectl apply, and then wait while the workflow ran. This caused several problems:

- A complex flow: rewrite the template file → kubectl apply → wait for completion → check the results manually

- Risk of substitution errors, because sed string replacement is not aware of YAML structure

- Conflicts over the shared template file when workflows run concurrently

To solve these problems, I switched to controlling workflows with the argo CLI, which made both the scripts and the workflows much simpler.
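Here is a minimal sketch of the argo CLI approach in Python:

- Parameters are passed with `argo submit -p name=value`, so the template file is never rewritten.
- `--wait` blocks until the workflow completes.
- Concurrent runs stay independent, provided the workflow uses `metadata.generateName` rather than a fixed name.

The file name and parameter names below are hypothetical and must match the `spec.arguments.parameters` of your workflow.

```python
import subprocess


def argo_submit_command(workflow_file: str, namespace: str, params: dict[str, str],
                        wait: bool = True) -> list[str]:
    """Build an `argo submit` command that passes workflow parameters with -p."""
    cmd = ["argo", "submit", workflow_file, "-n", namespace]
    for key, value in params.items():
        # Each -p overrides one entry in the workflow's spec.arguments.parameters.
        cmd += ["-p", f"{key}={value}"]
    if wait:
        # Block until the workflow finishes instead of polling with kubectl.
        cmd.append("--wait")
    return cmd


def submit(workflow_file: str, namespace: str, params: dict[str, str]) -> int:
    return subprocess.run(argo_submit_command(workflow_file, namespace, params)).returncode


if __name__ == "__main__":
    # Hypothetical file and parameter names.
    print(" ".join(argo_submit_command(
        "chaos-workflow.yaml", "litmus", {"experiment": "pod-delete", "duration": "60"})))
```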

Conclusion

In this article, we introduced a practical example of chaos engineering using two tools, ChaosToolkit and LitmusChaos, and concrete solutions to the challenges faced in the process.

Beyond simply adopting the tools, repeated improvements reduce human error and make chaos engineering safer and more reproducible. These include writing scripts to address operational issues, tuning parameters, and reviewing configuration management.

Chaos Toolkit's strength is flexible, declarative experiment definitions, while LitmusChaos's strength is its Kubernetes-native integration and rich set of experiments.

We hope that these examples will be helpful to those who are looking to tackle chaos engineering and improve the reliability of their systems.

If you are interested in SRG, please contact us here.