Which Valkey Managed Service Should I Choose? AWS (ElastiCache/MemoryDB) vs Google Cloud (Memorystore)

This is Kobayashi (@berlinbytes) from the Service Reliability Group (SRG) of the Media Headquarters.

SRG (Service Reliability Group) is a group that mainly provides cross-functional support for the infrastructure of our media services: improving existing services, launching new ones, and contributing to OSS.

This article uses benchmarks to examine how the Valkey managed services differ, what characterizes each of them, and which one fits which use case.

- Introduction
- Load test preparation
- The instance that applies the load
- Valkey, the one being put under pressure
- Testing Methodology
- Load test results
- Instance
- Valkey 7.2
- Valkey 8.0
- Consideration
- Price
- Conclusion

Introduction

We run services on multiple public clouds as well as private clouds.

When developing a new service, choosing the main platform is easy if a required feature is available on only one platform.

Recently, however, every provider has come to offer most of the features we need.

In that situation, the next most important factor is the balance between cost and performance.

This time, I would like to examine the differences between platforms and services using Valkey, which was forked from Redis in 2024 after restrictions on commercial use were imposed.

The comparison focuses on the following managed services:

- Amazon ElastiCache for Valkey

- Amazon MemoryDB for Valkey

- Google Cloud Memorystore for Valkey

On AWS, in addition to ElastiCache, which is optimized for use as a cache in front of other databases, there is MemoryDB, which offers enhanced availability and persistence and can be used as a primary database on its own. Both can use the Valkey engine, but the available versions differ: ElastiCache offers 7.2/8.0, while MemoryDB offers 7.2/7.3 (as of April 2025).

Google Cloud lets you adjust the number of shards, replicas, and tiers, so a single service, Memorystore, can cover the same use cases as both AWS services.

It also uses the Valkey engine (7.2/8.0).

Also, since this is a good opportunity, I decided to generate the load from the latest Arm instances and do a quick benchmark comparison of those as well.

Load test preparation

The instance that applies the load

On AWS, cost-effective Arm-based Graviton processors have recently become commonplace.

The latest Graviton4 processor is available as the 8g generation.

Google Cloud also offers C4A machine types powered by its Arm-based Axion processor, which have been generally available since October 2024.

On the Google Cloud side, we'll use the Axion processor, which became available in the Tokyo region around the end of March 2025.

On the AWS side, we'll use the Graviton4 processor, which, like Axion, is based on the Arm Neoverse-V2 design.

AWS and Google Cloud organize their instance sizes/machine types in a similar way: the series is determined by the vCPU-to-memory ratio.

The following table summarizes this.

| vCPU : Memory | AWS | Google Cloud |
| --- | --- | --- |
| 1 : 2 | Compute Optimized (c8g) | highcpu (c4a-highcpu) |
| 1 : 4 | General Purpose (m8g) | standard (c4a-standard) |
| 1 : 8 | Memory Optimized (r8g) | highmem (c4a-highmem) |

This time, I will use the balanced 1:4 ratio with 2 vCPUs.

| Provider | AWS | Google Cloud |
| --- | --- | --- |
| Instance type | m8g.large | c4a-standard-2 |
| Number of CPU cores | 2 cores | 2 cores |
| Memory | 8.0 GiB | 8.0 GB |

Valkey, the one being put under pressure

On the AWS side, no region yet offers 8g generation node types, and Google Cloud does not appear to publish the CPU generation behind its node types either, so we matched the two sides on vCPU count and memory size for the comparison.

In this case, we compare node types with 2 vCPUs and about 13 GB of memory.

The Valkey versions compared are ElastiCache 7.2/8.0, MemoryDB 7.2, and Memorystore 7.2/8.0.

I'd like to test again once MemoryDB 8.0 becomes available.

The configurations are summarized below.

| Provider | AWS | AWS | Google Cloud |
| --- | --- | --- | --- |
| Service | ElastiCache for Valkey | MemoryDB for Valkey | Memorystore for Valkey |
| Instance type | cache.r7g.large | db.r7g.large | highmem-medium |
| Number of CPU cores | 2 cores | 2 cores | 2 vCPUs |
| Memory | 13.07 GiB | 13.07 GiB | 13 GB |
| Region/Zone | Single Region | Single Region | Single Zone |
| Number of shards | 1 | 1 | 1 |
| Replicas | none | none | none |
| Engine | Valkey 7.2 / 8.0 | Valkey 7.2 | Valkey 7.2 / 8.0 |

Testing Methodology

- First, to get a rough idea of the processing power of the load-generating instances, we measure their CoreMark scores. CoreMark is often used as an index for evaluating the performance of a single CPU, and scores for various CPUs are published online.

CoreMark parameters

- Next, we fill each managed Valkey instance with data to about 30-40% of its capacity. Few services run against an empty data store, and benchmarks on an empty instance tend to produce overly good results, so pre-filling gives results closer to real-world operation.

- Next, within each public cloud, we run memtier_benchmark from a load-generating instance in the same region and zone as the Valkey instance.

- Three types of workloads (Write Only / Read Only / Read:Write=1:1) are each benchmarked for five sets of three minutes.

memtier_benchmark parameters

Write only

Read only

Mixed read and write

- We compare the number of operations per second and P99 latency for each of the five sets.
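The exact memtier_benchmark parameters are not reproduced here, so the following is only a minimal sketch of how the three workloads described above could be expressed. It assumes that `--ratio` (SET:GET), `--test-time`, and `--run-count` are used for the workload mix, the three-minute duration, and the five sets; the host name `valkey.example.internal`, the port, and the helper `memtier_command` are hypothetical, and thread, client, and data-size settings are left at their defaults because the original values are unknown.

```python
import shlex

# Workload name -> value for memtier_benchmark's --ratio option (SET:GET).
WORKLOAD_RATIOS = {
    "write_only": "1:0",
    "read_only": "0:1",
    "mixed": "1:1",
}


def memtier_command(host: str, port: int, workload: str,
                    test_time_s: int = 180, run_count: int = 5) -> list[str]:
    """Build a memtier_benchmark argument list for one workload.

    Defaults mirror the methodology in the text: five sets of three minutes.
    """
    return [
        "memtier_benchmark",
        f"--server={host}",
        f"--port={port}",
        f"--ratio={WORKLOAD_RATIOS[workload]}",
        f"--test-time={test_time_s}",
        f"--run-count={run_count}",
    ]


if __name__ == "__main__":
    # Hypothetical endpoint; replace with the real Valkey endpoint.
    for name in WORKLOAD_RATIOS:
        print(shlex.join(memtier_command("valkey.example.internal", 6379, name)))
```

Building the argument list in one place keeps the three workloads identical except for the ratio, which is what makes their results comparable.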

Load test results

Instance

| Provider | AWS | Google Cloud |
| --- | --- | --- |
| Instance type | m8g.large | c4a-standard-2 |
| CPU | AWS Graviton4 Processor | Google Axion Processor |
| Arm IP | Arm Neoverse-V2 | Arm Neoverse-V2 |
| OS | Ubuntu 24.04.2 LTS | Ubuntu 24.04.2 LTS |
| CoreMark score | 57405 | 61439 |

Graviton4 appears to have the highest score in the Graviton series, which is already highly rated for its price-performance ratio.

Axion, for its part, appears to deliver more than 40% better performance than instances of the same fourth generation that use Intel processors.

There is little difference in on-demand price between the two, but Axion scored about 7% higher in CoreMark.

This has not been officially stated by either company, but it is thought to be within the range of differences in clock frequency reported on some sites.

(Graviton4 2.7-2.8GHz, Axion 3.0GHz?)
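As a quick check of the figures above, the sketch below recomputes the CoreMark gap from the two measured scores and compares it with the gap implied by the clock frequencies. The clock values are the unofficial estimates quoted in the text, not confirmed specifications, and the helper name `relative_gain_percent` is only illustrative.

```python
def relative_gain_percent(baseline: float, candidate: float) -> float:
    """Return how much higher `candidate` is than `baseline`, in percent."""
    return (candidate - baseline) / baseline * 100.0


# Measured CoreMark scores from the table above.
graviton4_score = 57405
axion_score = 61439
score_gain = relative_gain_percent(graviton4_score, axion_score)
print(f"CoreMark gain (Axion vs Graviton4): {score_gain:.1f}%")  # 7.0%

# Unofficial clock estimates from the text: Graviton4 2.7-2.8 GHz, Axion 3.0 GHz.
clock_gain_low = relative_gain_percent(2.8, 3.0)
clock_gain_high = relative_gain_percent(2.7, 3.0)
print(f"Clock gain range: {clock_gain_low:.1f}% to {clock_gain_high:.1f}%")  # 7.1% to 11.1%
```

The measured 7.0% gap sits close to the lower end of the range implied by the estimated clocks, which is consistent with the clock-frequency explanation but does not prove it.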

Valkey 7.2

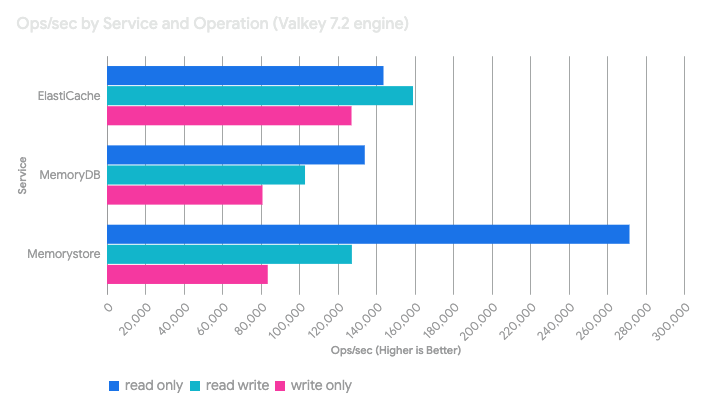

First, here are the results for the Valkey 7.2 engine for three types of workloads (Write Only / Read Only / Read:Write=1:1).

Valkey 8.0

And here are the results with the Valkey 8.0 engine.

Consideration

First, in a comparison using the Valkey 7.2 engine, Memorystore showed outstanding read performance compared to the other services.

However, the same trend was not observed for writes, suggesting that Memorystore is tuned to prioritize read operations over writes or mixed read-write patterns. It is well suited to services that expect extremely read-heavy caching.

ElastiCache performed well across all patterns and showed particularly good throughput and P99 latency in mixed read-write patterns.

It could be described as the most balanced of the three.

This versatility appears to reflect the original design goals of a caching layer, such as session management, where both reads and writes occur, and caching of frequently accessed data.

MemoryDB, on the other hand, trails both in read/write throughput and latency. However, this likely reflects its design philosophy: MemoryDB belongs to a different category, a durable primary database, rather than a cache like ElastiCache or Memorystore.

It achieves durability with a Multi-AZ transaction log, which the other services do not have. This appears to be an intended trade-off that eliminates the need for a separate cache + database layer.

When comparing with the Valkey 8.0 engine, ElastiCache showed a slight increase in write performance, but no significant performance improvement was confirmed.

Memorystore saw an increase of around 5% in Ops/sec for all workloads, and P99 latency also improved.

It appears that its tendency to prioritize read operations remains unchanged.

In other words, upgrading the engine version does not necessarily translate directly into better performance.

Testing under real-world workloads and environments is likely essential to confirm the actual impact on performance.

Price

Finally, let's look at cost.

Comparing the per-node on-demand prices in the Tokyo region for the nodes used in this study, MemoryDB is about 23% more expensive than ElastiCache.

Memorystore is about 17-20% more expensive than ElastiCache and 5-11% cheaper than MemoryDB.

| Provider | AWS | AWS | Google Cloud |
| --- | --- | --- | --- |
| Service | ElastiCache for Valkey | MemoryDB for Valkey | Memorystore for Valkey |
| Instance type | cache.r7g.large | db.r7g.large | highmem-medium |
| On-demand price (hourly) | $0.2104 | $0.2597 | $0.247 |
| On-demand price (monthly) | $151.488 | $186.984 | $177.84 |
| Region/Zone | ap-northeast-1 | ap-northeast-1 | asia-northeast1 |
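The monthly figures and relative differences can be recomputed from the hourly prices in the table. The sketch below assumes a 720-hour month (30 days x 24 hours), an assumption that happens to reproduce the monthly column exactly; the helper names are illustrative only.

```python
HOURS_PER_MONTH = 720  # assumption: 30 days x 24 hours, matches the table's monthly column

# Hourly on-demand prices (USD) taken from the table above.
hourly_usd = {
    "ElastiCache": 0.2104,
    "MemoryDB": 0.2597,
    "Memorystore": 0.247,
}


def monthly_cost(hourly: float, hours: int = HOURS_PER_MONTH) -> float:
    """Monthly cost of one node running for the whole month."""
    return hourly * hours


def percent_more(price: float, baseline: float) -> float:
    """How much more expensive `price` is than `baseline`, in percent."""
    return (price / baseline - 1.0) * 100.0


for name, hourly in hourly_usd.items():
    print(f"{name}: ${monthly_cost(hourly):.3f} per month")

pairs = [
    ("MemoryDB", "ElastiCache"),
    ("Memorystore", "ElastiCache"),
    ("Memorystore", "MemoryDB"),
]
for target, baseline in pairs:
    diff = percent_more(hourly_usd[target], hourly_usd[baseline])
    print(f"{target} vs {baseline}: {diff:+.1f}%")
```

For these list prices the differences come out at roughly +23% (MemoryDB vs ElastiCache), +17% (Memorystore vs ElastiCache), and -5% (Memorystore vs MemoryDB); the additional charges listed below are not included.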

In addition to the instance fee, each service charges separately for the following.

ElastiCache:

- Data tiering pricing (if used)

- Network charges (only for traffic across AZs and regions)

- Backup fee (if you use backups)

MemoryDB:

- Data tiering pricing (if used)

- Data write charges (if you write more than 10 TB per month)

- Data transfer charges (when using multi-region)

- Snapshot storage pricing (if you use snapshots)

Memorystore:

- AOF persistence fee (if you use AOF persistence)

- Network charges (only for traffic across zones and regions)

- Backup fee (if you use backups)

Conclusion

This concludes our comparison of managed services with the Valkey engine. To summarize briefly:

Memorystore seems to be the best choice for read-heavy caching.

For general-purpose caching, session stores, and balanced workloads, I think ElastiCache is the way to go.

If data durability and strong consistency are your top priorities, MemoryDB may be the best solution, provided you are willing to accept higher write latency and cost.

In the future, I would like to compare Valkey managed services on other public clouds, and to test again when engine versions are updated.

For a comparison with the Redis engine, please see the detailed article by Kikai, who is on the same SRG team as me.

With ElastiCache, we tested on-demand nodes this time, but there is also a serverless option.

For serverless testing, please also see the article by Nakajima from the Applibot SRE team, which provides a good summary.

SRG is looking for people to work with us.

If you're interested, please contact us here.